[three]Bean

PyCon Report, 2016

Jun 06, 2016 | categories: python, fedora, pycon

Like in previous years, a few of us from Fedora were at PyCon US in Portland, Oregon for the week. The conference is over now (I'm sticking around for a day to explore the Pacific Northwest). Here are some of the highlights from the talks I attended and the community sprint days:

Talks worth checking out

- K Lars Lohn's final keynote was out of control. None of us were ready for it. It wasn't even about python but I know everyone loved it. Parisa Tabriz's keynote on hacker mindset was very good (she's the "security princess" at Google) and Guido van Rossum's keynote on the state of python wandered off into an interesting autobiography about what made Python possible. If you're interested in software architecture, the Wednesday morning keynote on Plone and Zope by @cewing was an interesting overview of the evolution of that stack.

- Alex Gaynor's talk on automation for dev groups, cleverly titled "The cobbler's children have no shoes", was close to my heart. There was a salient point in the Q&A section: we often focus on automating workflows that are somehow problematic, but sometimes the problem is a deeper social one. Automation can surface and inadvertently exacerbate tension between groups that already have friction.

- For web development stuff, three talks are worth highlighting: @callahad of Mozilla (who is an awesome person) gave a talk on new mobile web technologies: Service Workers, Push, and App Manifests. It's worth a listen for people in the Fedora and Red Hat infrastructure ecosystem. @dshafik of Akamai gave a super interesting talk on HTTP/2 and the consequences for web devs. The short of it is that we have all these hacks in place that have become "best practice" over the years (sprite sheets, compressed and concatenated assets, bloated collection REST responses), none of which are necessary or desirable when we have HTTP/2 ready to go server-side. Sixty percent of browsers are ready to consume HTTP/2 apps and it's all backwards compatible. Definitely worth looking into. Last but not least, if you do web development, check out Sumana's talk titled "HTTP can do that?!" which goes over how to get the most out of HTTP/1 (something we've not always been the best at doing) -- very engaging.

- If you watch any of the talks here, check out Larry Hastings's talk on removing Python's global interpreter lock. It's important if you use the language, deal with performance issues, and especially if you write C extensions. If none of those are you -- the details of the interpreter implementation are still super interesting. #gilectomy

- Of course the hallway track was the most valuable. I had good talks with @goodwillbits, @lvh, @sils1297, and too many others to mention.

For the community code sprints, I hacked with a couple other people on the test suite for koji, which is the build system used by Fedora and many other RPM-based Linux distributions. We have a lot of web services and systems that go into producing the distro. Koji was one of the first to be written back in the day and it is starting to show its age. Getting test coverage up to a reasonable state is a prerequisite for further refactoring (porting to python3, making it more modular, faster, etc.).

- https://pagure.io/koji/pull-request/93

- https://pagure.io/koji/pull-request/94

- https://pagure.io/koji/pull-request/95

- https://pagure.io/koji/pull-request/96

- https://pagure.io/koji/pull-request/98

- https://pagure.io/koji/pull-request/99

- https://pagure.io/koji/pull-request/100

- https://pagure.io/koji/pull-request/101

- https://pagure.io/koji/pull-request/102

- https://pagure.io/koji/pull-request/103

- https://pagure.io/koji/pull-request/104

- https://pagure.io/koji/pull-request/105

- https://pagure.io/koji/pull-request/106

- https://pagure.io/koji/pull-request/107

- https://pagure.io/koji/pull-request/108

Huge shoutout to Sijis Aviles, Joel Vasallo, and Robert Belozi for slogging through it with me. We had fun!

Backend rewrite of the Fedora-Packages webapp

Nov 20, 2015 | categories: python, cache, fedora

Just yesterday, we deployed the 3.0 version -- a rewrite -- of the fedora-packages webapp (source). For years now, it has suffered from data corruption problems that stemmed from multiple processes all fighting over resources stored on a gluster share between the app nodes. Gluster's not to blame. It's that too many things were trying to be "helpful" and no amount of locking seemed to solve the problem.

tl;dr

We have lots of old, open tickets about various kinds of data being missing from the webapp. Those are hopefully all resolved now. Please use it and file new tickets if you notice bugs. Patience appreciated.

Architecture

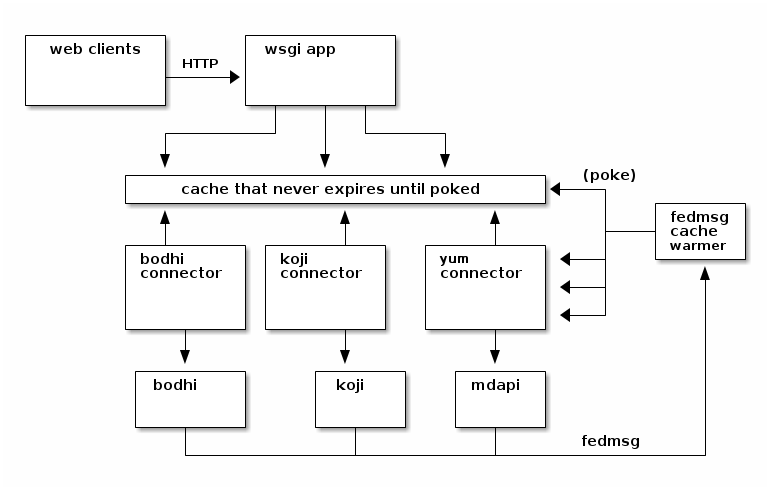

Let's take a look at the internal architecture of the app. It's a cool idea. It doesn't really have any data of its own, but it is a layer on top of our other packaging apps; it just re-presents all of their data in one place. This is the "microservices" dream?

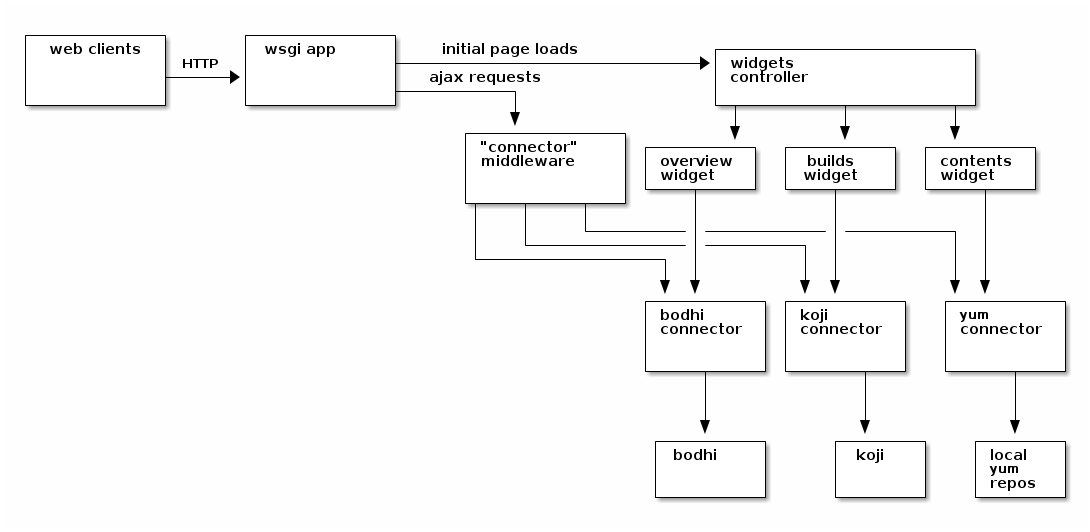

Here we have a diagram of the system as it was originally written in its "2.0" state.

HTTP requests come in to the app either for some initial page load or for some kind of subsequent ajax data. The app hands control off to one of two major subsystems -- a "widgets" controller that handles rendering all the tabs, and a "connectors" dispatcher that handles gathering and returning data. The widgets themselves actually re-use the connectors under the hood to prepare their initial data.
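To make that split concrete, here is a minimal sketch of the shape described above. It is not the app's real code: `BuildsConnector`, `dispatch_connector`, and `render_widget` are hypothetical names made up for illustration, and the connector returns canned data instead of talking to a real service.

```python
class Connector:
    """Hypothetical base class: a connector gathers data from one backend."""
    name = "base"

    def query(self, package):
        raise NotImplementedError


class BuildsConnector(Connector):
    """Hypothetical connector; a real one would call out to the build system."""
    name = "builds"

    def query(self, package):
        return {"package": package, "builds": []}


# Registry used by the dispatcher to look connectors up by name.
CONNECTORS = {c.name: c for c in (BuildsConnector(),)}


def dispatch_connector(name, package):
    """Serve an ajax data request by delegating to the named connector."""
    return CONNECTORS[name].query(package)


def render_widget(name, package):
    """Render a tab; the widget reuses the connector for its initial data."""
    data = dispatch_connector(name, package)
    return "<div class='{}' data-count='{}'>{}</div>".format(
        name, len(data["builds"]), package
    )


html = render_widget("builds", "nethack")
```

The point is only that both entry points end up in the same connector code, so anything slow in a connector is slow for page loads and ajax calls alike.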

More complicated than that

First, there are only three widgets/connectors depicted above, but really there are many more (a search connector, a bugzilla connector, etc..). Some of them were written, but never included any place in the app (in the latest pass through the code, I found an unused TorrentConnector which returned data about Fedora torrent downloads!).

Note that over the last few years, the widgets subsystem has remained largely unchanged. It is a source of technical debt, but it hasn't been the cause of any major breakages, so we haven't had cause to touch it. The widgets have metaclasses under the hood, can be nested into a hierarchy, and can declare js/css resource dependencies in a tree. It's pretty massive -- all on the server-side.

There is also (not depicted) an impenetrable thicket of javascript that gets served to clients which in turn wires up a lot of the client-side behavior.

Lastly, there are (were) a variety of cronjobs (not depicted) which would update local data for a subset of the connectors. Notably, there was a yum-sync cronjob that would pull the latest yum repodata down to disk. There was a cronjob that would pull down all the latest koji builds "since the last time it ran". Another would crawl through the local yum repos and rebuild the search index based on what it thought was in rawhide.

Just... keep all that in mind.

Focus on the connectors

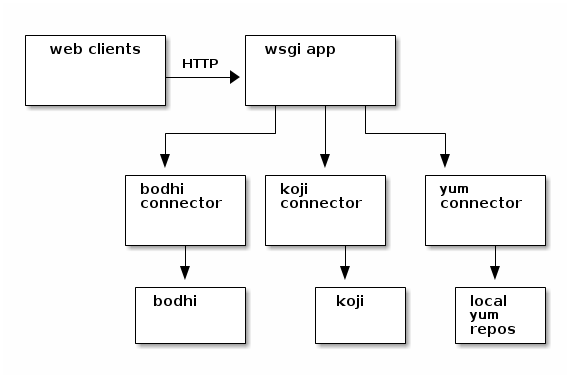

Here's a simpler drawing:

So, when it was first released, this beast was too slow. The koji connector would take forever to return.. and bugzilla even longer.

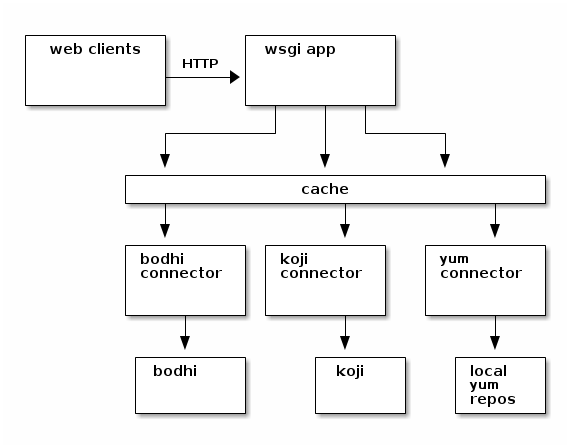

To try and make things snappy, I added a cache layer internally, like this:

The "connector middleware" and the widget subsystem would both use the cache, and things became somewhat nicer! However, the cache expiry was too long, and people complained (rightly) that the data was often out of date. So, we reduced it and had the cache expire every 5 minutes. But.. that defeated the whole point. Unless someone else had loaded the same page within the last 5 minutes, the cache would already be expired when you requested it, and you'd have to wait and wait for the connectors to do their heavy lifting anyway.
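The failure mode is easy to reproduce with a toy expiring cache. This is only a sketch under assumptions: `TTLCache` and `slow_connector` are invented for the example (the real app's cache layer is not shown here), and a fake clock stands in for real time so the run is deterministic.

```python
import time


class TTLCache:
    """A tiny expiring cache; `clock` is injectable so the demo is deterministic."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (stored_at, value)

    def get_or_create(self, key, creator):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]      # fresh hit: fast path
        value = creator()        # miss or expired: the requester waits here
        self._store[key] = (now, value)
        return value


calls = []


def slow_connector():
    """Stands in for a slow koji or bugzilla query."""
    calls.append(1)
    return {"builds": ["example-1.0-1"]}


fake_now = [0.0]
cache = TTLCache(ttl_seconds=300, clock=lambda: fake_now[0])

cache.get_or_create("builds:example", slow_connector)  # first visitor pays
fake_now[0] = 60.0
cache.get_or_create("builds:example", slow_connector)  # within 5 minutes: cached
fake_now[0] = 3600.0
cache.get_or_create("builds:example", slow_connector)  # an hour later: pays again
```

With a short expiry, only visitors who arrive right behind someone else get the fast path. Everyone else triggers the slow connector call themselves.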

Async Refresh

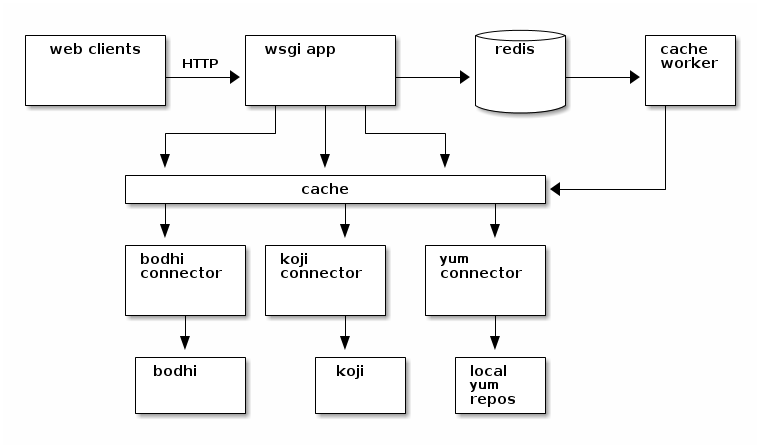

That's when (back in 2013), I got this idea to introduce an asynchronous cache worker, that looked something like this.

If you requested a page and the cache data was too old, the web app would just return the old data to you anyways, but it would also stick a note in a redis queue telling a cache worker daemon that it should rebuild that cache value for the next request.
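Roughly, the serve-stale-and-queue-a-refresh idea looks like the sketch below. To keep it runnable anywhere, a `collections.deque` stands in for the redis queue and a plain dict for the cache. `MAX_AGE`, `get_page_data`, and `run_worker_once` are names assumed for the example, not the app's own.

```python
from collections import deque

MAX_AGE = 300            # seconds; an assumed staleness threshold
cache = {}               # key -> (stored_at, value)
refresh_queue = deque()  # stand-in for the redis queue


def get_page_data(key, now):
    """Web-app side: never block on a slow connector if any value is cached."""
    entry = cache.get(key)
    if entry is None:
        return None  # nothing cached yet; a real app would have to build it inline
    stored_at, value = entry
    if now - stored_at > MAX_AGE and key not in refresh_queue:
        refresh_queue.append(key)  # leave a note for the worker
    return value                   # possibly stale, but immediate


def run_worker_once(connector, now):
    """Worker side: rebuild one queued cache value, if there is one."""
    if not refresh_queue:
        return False
    key = refresh_queue.popleft()
    cache[key] = (now, connector(key))
    return True


cache["builds:example"] = (0, "old data")
first = get_page_data("builds:example", now=1000)   # stale value, refresh queued
run_worker_once(lambda key: "new data", now=1001)
second = get_page_data("builds:example", now=1002)  # rebuilt value
```

Note that the refresh only ever happens because somebody asked for the page, which is why rarely visited pages always look stale to whoever shows up first.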

I thought it was pretty cool. You could request the page and sometimes get old data, but if you refreshed shortly after that you'd have the new stuff. Pages that were "hot" (being clicked on by multiple people) appeared to be kept fresh more regularly.

However, a page that was "cold" -- something that someone would visit once every few months -- would often present horribly old information to the requester. People frequently complained that the app was just out of sync entirely.

To make matters worse, it was out of sync entirely! We had a separate set of issues with the cron jobs (the one that would update the list of koji builds and the one that would update the yum cache). Sometimes, the webapp, the cache worker, and the cronjob would all try to modify the same files at the same time and horribly corrupt things. The cronjob would crash, and it would never go back to find the old builds that it failed to ingest. It was a mess.

The latest rewrite

Two really good decisions were made in the latest rewrite:

First, we dispensed entirely with the local yum repos (which were the resources most prone to corruption). We moved that out to an external network service called mdapi, which is very cool in its own right, and it makes the data story much simpler for the fedora-packages app.

Second, I replaced the reactive async cache worker with an active event-driven cache worker. Instead of updating the cache when a user requests the page, we update the cache when the resources change in the system we would query. For example, when someone does a new build in the buildsystem, the buildsystem publishes a message to our message bus. The cache worker receives that event -- it first deletes the old JSON data for the builds page for that package in the cache, and then it calls the KojiConnector with the appropriate arguments to re-fill that cache value with the latest data.

We turned off expiration in the cache altogether so that values never expire on their own. The outcome here is that the page data should be freshly cached before anyone requests it -- active cache invalidation.
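As a rough sketch of that worker loop (not the deployed code): the handler below reacts to a build message by deleting the cached value and refilling it straight away. The topic suffix, the message layout, the cache key format, and the `koji_connector` stand-in are all assumptions made for the example.

```python
cache = {}   # no expiry: values only change when an event says they should
BUILDS = {}  # pretend build system state, for the demo only


def koji_connector(package):
    """Hypothetical stand-in for calling the KojiConnector."""
    return {"package": package, "builds": list(BUILDS.get(package, []))}


def handle_message(msg):
    """Cache worker: react to a build event from the message bus."""
    # Assumed topic suffix; anything else is ignored by this handler.
    if not msg["topic"].endswith("buildsys.build.state.change"):
        return
    package = msg["msg"]["name"]          # assumed message layout
    key = "builds:" + package             # assumed cache key format
    cache.pop(key, None)                  # first delete the old JSON data
    cache[key] = koji_connector(package)  # then re-fill it from the source


BUILDS["example"] = ["example-1.0-1"]
handle_message({
    "topic": "org.fedoraproject.prod.buildsys.build.state.change",
    "msg": {"name": "example"},
})
```

The web app itself only ever reads from `cache` in this model; all writes happen in the one worker process, in response to events.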

With those two changes, we were able to kill off all of the cronjobs.

Some additional complications: first, the cache worker also updates a local xapian database in response to events (in addition to the expiration-less cache), but it is the only process doing so and so can hopefully avoid further corruption issues.

Second, the bugzilla connector can't work like this yet because we don't yet have bugzilla events on our message bus. Zod-willing, we'll have them in January 2016 and we can flip that part on. The bugs tab will be slower than we like until then. UPDATE: We got bugzilla on the bus at the end of March, 2016.

Looking forwards

We're building the fedora-hubs backend with the same kind of architecture (actively-invalidated cache of tough-to-assemble page data), so, we get to learn practical lessons here about what works and what doesn't.

Do hit us up in #fedora-apps on freenode if you want to help out, chat, or lurk. I'll be cleaning up any loose bugs on this deployment in the coming weeks while starting work on a new pdc-updater project.

Upcoming Python3 Porting vFAD

Nov 02, 2015 | categories: python, fedora

All thanks to Abdel Martínez and Matej Stuchlik, we're going to be holding a (virtual) international "Fedora Activity Day" for Python 3 porting, and it is going to be amazing. Save the date -- November 14th and 15th.

Things to consider:

- If you haven't heard, 2016 is going to be the year of Python3 on the desktop, so...

- If you don't know what you're doing with Python3 porting, don't sweat it. If you want to learn, come join and we'll try to teach you along the way.

- If you don't know how to submit patches upstream, don't sweat it. If you want to learn, come join and we'll try to teach you along the way.

- If you want to hack with us, add your info to the wiki page. We'll be hanging out in an opentokrtc channel and in #fedora-python on freenode. See the details.

- We have a really cool webapp that Petr Viktorin put together. It tracks the status of different packages in Fedora and upstream so we can coordinate more effectively about what needs to be done.

- If you want to get people in your city together, that can make it more fun. You can join the video chat as a group! The EMEA crew will be online from the PyCon CZ 2015 sprints (cool). There are a couple people from my local Python User Group that want to join in, although we're still searching for a reasonable place to meet up. I plan to be around starting at 18:00 UTC both days, although I bet the EMEA crew will be online much earlier.

Happy Hacking

Speeding up that nose test suite.

Jun 23, 2015 | categories: python, fedmsg, fedora, nose

Short post: I just discovered the --with-prof option to the nosetests command. It profiles your test suite by using the hotshot module from the Python standard library and it found a huge sore spot in my most frequently run suite. In this pull request we got the fedmsg_meta suite running 31x faster.

Compare before:

    (fedmsg_meta)❯ time $(which nosetests) -x
    Ran 3822 tests in 270.822s
    OK (SKIP=1638)
    ----------------------------------------------------------------------
    Success!
    $(which nosetests) -x  267.30s user 1.32s system 98% cpu 4:33.53 total

And after:

    (fedmsg_meta)❯ time $(which nosetests) -x
    Ran 3822 tests in 5.982s
    OK (SKIP=1638)
    ----------------------------------------------------------------------
    Success!
    $(which nosetests) -x  3.87s user 0.71s system 52% cpu 8.700 total

That test suite used to take forever. It's the whole reason I wrote nose-audio in the first place!
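The hotshot module only exists in Python 2, so if your suite runs on Python 3 you can get a similar view by hand with cProfile and pstats from the standard library. This is a sketch with a made-up test case (`ExampleTest`, `slow_helper`, and `profile_suite` are illustrative names), not what nose does internally.

```python
import cProfile
import io
import pstats
import unittest


def slow_helper():
    """Stands in for whatever your tests spend their time in."""
    return sum(i * i for i in range(20000))


class ExampleTest(unittest.TestCase):
    def test_something(self):
        self.assertGreater(slow_helper(), 0)


def profile_suite(test_case, top=5):
    """Run one TestCase class under cProfile and return (result, report text)."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(test_case)
    profiler = cProfile.Profile()
    profiler.enable()
    # Send the runner's own output to a buffer so only the profile is printed.
    result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
    profiler.disable()
    report = io.StringIO()
    stats = pstats.Stats(profiler, stream=report)
    stats.sort_stats("cumulative").print_stats(top)
    return result, report.getvalue()


result, report = profile_suite(ExampleTest)
print(report)
```

Sorting by cumulative time is what tends to surface a single helper that every test calls. Raise `top` if the first few rows are all test-runner plumbing.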

PyCon 2015 (Part II)

Apr 21, 2015 | categories: python, fedora, pycon

I wrote last week about how a few of us from Fedora were at PyCon US in Montreal for the week. It's all over and done with and we're back home now (I got a flu-like bug for the second time this season on the way home...). So, these are just some quick notes on what I did at the sprints!

Early on, I ported python-fedora to python3 and afterwards bkabrda picked up the torch and ported fedmsg and the expansive fedmsg_meta module. The one thing standing in the way of full-on python3 fedmsg is the M2Crypto library which will probably not see python3 compatibility anytime soon. Slavek courageously ported half of fedmsg's crypto stack to the python3-compatible cryptography library only to find that it didn't support the other half of the equation. We're keeping those changes in a branch until that gets caught up.

The most exciting bit was helping Nolski with his tool that puts fedmsg notifications on the OSX desktop. It totally works. Crazy, right?

Later in the week, I helped decause a bit with his new cardsite app. Load it up and let it run for a while. It's yet another neat way to visualize the activity of the Fedora community in realtime.

I started a prototype of fedora-hubs which doesn't do much but display little dummy widgets, but it is useful for reflecting on how the architecture ought to work.

I wrote some code to get the fedmsg-notify desktop tool to pull its preferences from the FMN service. The changes work, but they required some server-side patches to FMN that are done, but haven't yet been rolled out to production (and we're in freeze for the Beta release anyways..).

In order to use your FMN preferences, you currently have to set a gsettings value by hand which is unacceptable and gross, but I'm not sure how to present it in the config UI. We can't just go all-in with FMN because there are other distros out there (Debian) which use fedmsg-notify but which don't run their own FMN service. We'll have to think on it and let it sit for a while.

Lastly, Bodhi2 saw some good work. (We fixed some bugs that needed to be hammered out before release and we actually have an RPM and installed it on a cloud node! Staging will be coming next once some el7 compat deps get sorted out.)

ncoghlan introduced us to the authors of kallithea, which led to some conversations about pagure and where we can collaborate.

I was really glad to meet sijis for fun-time late-night hackery in the hotel lobby.

That's all I can remember. It was a whirlwind, as always. Happy Hacking!
