[three]Bean

Datanommer database migrated to a new db host

Sep 29, 2014 | categories: fedmsg, datanommer, fedora

The bad news: our database of all the fedmsg messages ever - it's getting slower and slower. If you use datagrepper to query it with any frequency, you will have noticed this. The dynamics of it are simple: we are always storing new messages in postgres and none of them ever get removed. We're over 13 million messages now.

When we first launched datagrepper for public queries, a query took around 3-4s to complete. We called this acceptable, and even fast enough to build services that relied extensively on it, like the releng dashboard. As things got slower, queries could take around 60s, and pages like the releng dash that made 20-some queries to it would take a long time to render fully.

Three Possible Solutions

We started looking at ways out of this jam.

NoSQL -- funny, the initial implementation of datanommer was written on top of mongodb and we are now re-considering it. At Flock 2014, yograterol gave a talk on NoSQL in Fedora Infra which has sparked off a series of email threads and an experiment by pingou to import the existing postgres data into mongo. The next step there is to run some comparison queries when we get time to see if one backend is superior to the other in respects that we care about.

Sharding -- another idea is to horizontally partition our giant messages table in postgres. We could have one hot_messages table that holds only the messages from the last week or month, another warm_messages table that holds messages from the last 6 months or so, and a third cold_messages table that holds the rest of the messages from time immemorial. In one version of this scheme, no messages are duplicated between the tables and we have a cronjob that periodically (daily?) moves messages from hot to warm and from warm to cold. This is likely to increase performance for most use cases, since most people are only ever interested in the latest data on topic X, Y or Z. If someone is interested in getting data from way back in time, they typically also have the time to sit around and wait for it. All of this, of course, would be an implementation detail hidden inside of the datagrepper and datanommer packages. End users would simply query for messages as they do now and the service would transparently query the correct tables under the hood.
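To make the "transparently query the correct tables" part concrete, here is a minimal sketch of how the service might pick tables for a requested time range. Nothing like this exists in datagrepper yet; the function name and the exact 30-day and 180-day windows are assumptions for illustration (the idea above only says "last week or month" and "last 6 months or so").

```python
from datetime import datetime, timedelta

# Hypothetical retention windows, chosen only for this sketch.
HOT_WINDOW = timedelta(days=30)
WARM_WINDOW = timedelta(days=180)


def tables_for_range(start, end, now):
    """Return the tables that could hold messages timestamped in [start, end].

    hot_messages holds timestamps >= now - HOT_WINDOW, warm_messages holds
    the span between the two cutoffs, and cold_messages holds the rest.
    """
    hot_cutoff = now - HOT_WINDOW
    warm_cutoff = now - WARM_WINDOW
    tables = []
    if end >= hot_cutoff:
        tables.append("hot_messages")
    if start < hot_cutoff and end >= warm_cutoff:
        tables.append("warm_messages")
    if start < warm_cutoff:
        tables.append("cold_messages")
    return tables


now = datetime(2014, 9, 29)
recent = tables_for_range(now - timedelta(days=2), now, now)
everything = tables_for_range(now - timedelta(days=400), now, now)
```

A query for the last two days only touches hot_messages, while a query reaching back 400 days touches all three tables, which is where the "sit around and wait for it" cost would land.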

PG Tools -- While looking into all of this, we found some postgres tools that might just help our problems short term (although we haven't actually gotten a chance to try them yet). pg_reorg and pg_repack look promising. When preparing to test other options, we pulled the datanommer snapshot down and imported it on a cloud node and found, surprisingly, that it was much faster than our production instance. We also haven't had time to investigate this, but my bet is that when it is importing data for the first time, postgres has all the time in the world to pack and store the data internally in a way that it knows how to optimally query. This needs more thought -- if simple postgres tools will solve our problem, then we'll save a lot more engineering time than if we try to rewrite everything against mongo or rewrite everything against a cascade of tables (sharding).

Meanwhile

Doom arrived.

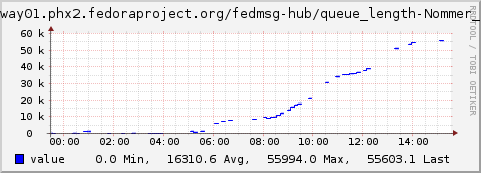

The above is a graph of the datanommer backlog from last week. The number on the chart represents how many messages have arrived in datanommer's local queue, but which have not been processed yet and inserted into the database. We want the number to be at 0 all the time -- indicating that messages are put into the database as soon as they arrive.

Last week, we hit an emergency scenario -- a critical point -- where datanommer couldn't store messages as fast as they were arriving.

Now.. what caused this? It wasn't that we were receiving messages at a higher than normal rate. The database's slowness had passed a critical point: inserting a message now took so long that the rate at which we processed messages fell below the rate at which they arrived (see my other post on new measurements).
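A toy model shows why there is a critical point at all. This is only a sketch with made-up numbers, not a measurement of datanommer: it assumes a single consumer that can store at most one message per `insert_seconds`, and a steady arrival rate.

```python
def simulate_backlog(arrivals_per_sec, insert_seconds, duration_sec):
    """Return the backlog after duration_sec for a single consumer.

    The consumer can store at most 1 / insert_seconds messages per second.
    All numbers passed in here are hypothetical.
    """
    service_rate = 1.0 / insert_seconds
    backlog = 0.0
    for _ in range(duration_sec):
        backlog += arrivals_per_sec            # new messages land in the queue
        backlog -= min(backlog, service_rate)  # the consumer drains what it can
    return backlog


fast_db = simulate_backlog(arrivals_per_sec=2, insert_seconds=0.25, duration_sec=60)
slow_db = simulate_backlog(arrivals_per_sec=2, insert_seconds=1.0, duration_sec=60)
```

With the same arrival rate, the backlog stays at zero while an insert is fast enough, and grows without bound once an insert takes longer than the gap between arrivals.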

Migration

The rhel6 db host for datanommer was pegged so we decided to try and migrate it to a new rhel7 vm with more cpus as a first bet. This turned out to work much better than I expected.

Times for simple queries are down from 500 seconds to 4 seconds and our datanommer backlog graph is back down to zero.

The migration took longer than I expected and we have an unfortunate 2 hour gap in the datanommer history now. With some more forethought, we could get around this by standing up a temporary second db to store messages during the outage, and then adding those back to the main db afterwards. If we can get some spare cycles to set this up and try it out, maybe we can have it in place for next time.

For now, the situation seems to be under control but is not solved in the long run. If you have any interest in helping us with any of the three possible longer term solutions mentioned above (or have ideas about a fourth) please do join us in #fedora-apps on freenode and lend a hand.

Fedmsg Health Dashboard

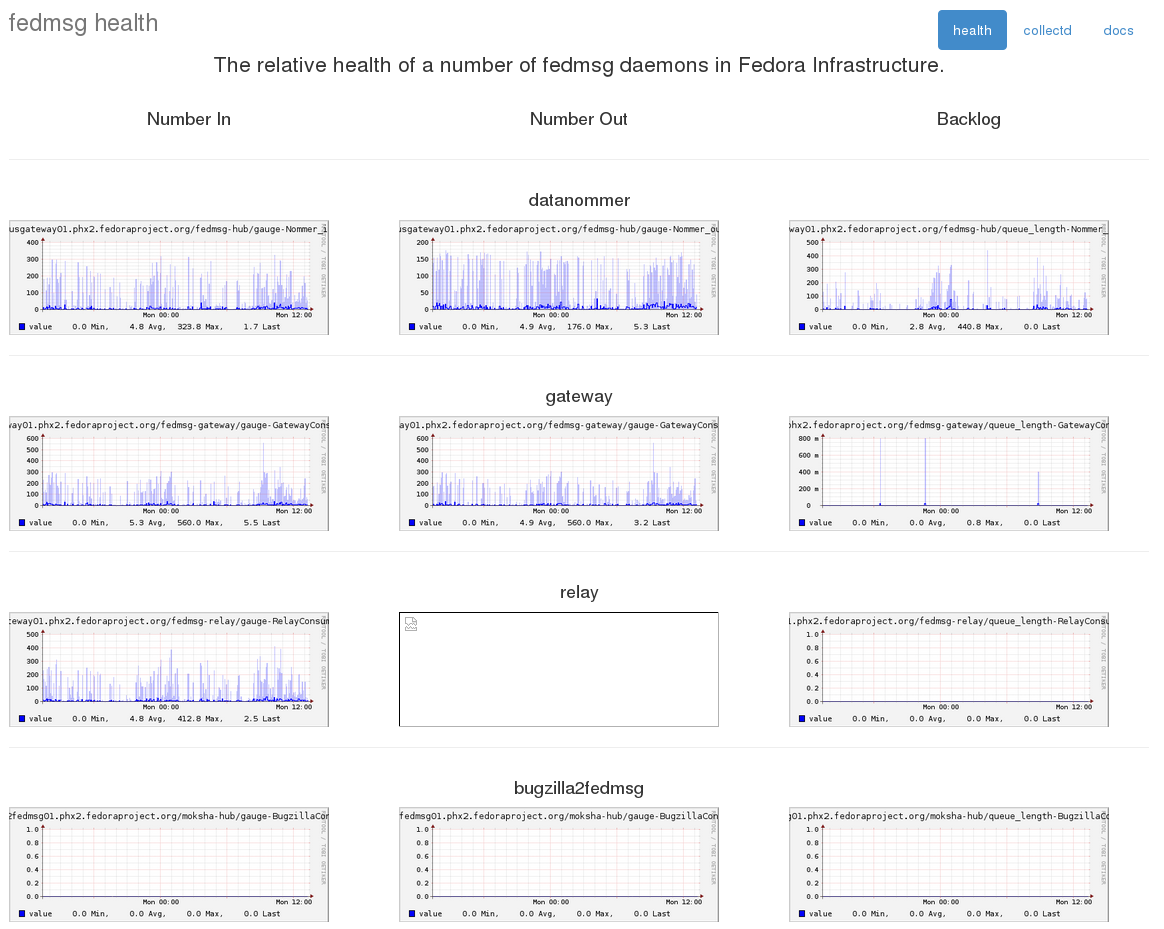

Sep 29, 2014 | categories: fedmsg, fedora, collectd

We've had collectd stats on fedmsg for some time now but it keeps getting better. You can see the new pieces starting to come together on this little fedmsg-health dashboard I put together.

The graphs on the right side -- the backlog -- we have had for a few months now. The graphs on the left and center indicate respectively how many messages were received by and how many messages were processed by a given daemon. The backlog graph on the right shows a sort of 'difference' between those two -- "how many messages are left in my local queue that need to be processed".

Having all these will help to diagnose the situation when our daemons start to become noticeably clogged and delayed. "Did we just experience a surge of messages (e.g., a mass rebuild)? Or is our rate of handling messages slowing down for some reason (e.g., a slow database)?"
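The two questions above can be read straight off the received and processed rates. Here is a rough sketch of that reasoning; the function, the `normal_received_rate` baseline and the 1.5x tolerance are all assumptions for illustration and are not part of the dashboard.

```python
def diagnose(received_rate, processed_rate, normal_received_rate, tolerance=1.5):
    """Classify a daemon's state from its message rates (messages per second).

    normal_received_rate and tolerance are hypothetical knobs for this sketch.
    """
    if processed_rate >= received_rate:
        return "keeping up"
    if received_rate > tolerance * normal_received_rate:
        return "surge of incoming messages"
    return "slow handling"


during_mass_rebuild = diagnose(received_rate=50, processed_rate=12, normal_received_rate=10)
during_db_trouble = diagnose(received_rate=10, processed_rate=3, normal_received_rate=10)
```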

This is mostly for internal infrastructure team use -- but I thought it would be neat to share.

Fedora Notifications, 0.3.0 Release

Sep 16, 2014 | categories: notifications, fedmsg, fedora

Just as a heads up, a new release of the Fedora Notifications app (FMN) was deployed today (version 0.3.0).

Frontend Improvements

Negated Rules - Individual rules (associated with a filter) can now be negated. This means that you can now write a rule like: "forward me all messages mentioning my username except for meetbot messages and those secondary arch koji builds."
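As a sketch of how negation composes, assume a filter matches only when every one of its rules agrees, and that each rule carries a negated flag. The predicates, the username and the message layout below are illustrative and are not FMN's actual code.

```python
def filter_matches(rules, message):
    """Return True when every rule agrees with the message.

    Each rule is a (predicate, negated) pair; a negated rule must NOT match.
    """
    return all(predicate(message) != negated for predicate, negated in rules)


def mentions_user(msg):
    # "ralph" is a placeholder username for this sketch.
    return "ralph" in msg.get("usernames", [])


def is_meetbot(msg):
    return msg.get("topic", "").startswith("org.fedoraproject.prod.meetbot.")


# "Everything mentioning my username, except for meetbot messages."
my_filter = [(mentions_user, False), (is_meetbot, True)]

build = {"topic": "org.fedoraproject.prod.buildsys.build.state.change",
         "usernames": ["ralph"]}
meeting = {"topic": "org.fedoraproject.prod.meetbot.meeting.start",
           "usernames": ["ralph"]}
```

Here `filter_matches(my_filter, build)` is true and `filter_matches(my_filter, meeting)` is false, since the negated meetbot rule vetoes the second message.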

Disabled Filters - Filters can now be disabled instead of just deleted, thus letting you experiment with removing them before committing to giving them the boot.

Limited Info - The information on the "context" page is now successively revealed. Previously, when you first visited it, you were presented with an overwhelming amount of information and options. It was not at all obvious that you had to 'enable' a context first before you could receive messages. It was furthermore not obvious that even if you had it enabled, you still had to enter an IRC nick or an email address in order for things to actually work. It now reveals each section as you complete the preceding ones, hopefully making things more intuitive. It also warns you that you need to be signed on to freenode and identified for the confirmation process to play out.

Truncated Names - Lastly and least, on the "context" page, rule names are no longer truncated with a ..., so you can more easily see the entirety of what each filter does.

Backend Improvements

Group Maintainership - There's a new feature in pkgdb as of this summer: groups can now be maintainers of packages. For example, the perl-sig group can own a number of core perl packages. FMN didn't know to route messages about such packages to you if you're a member of the perl-sig group, but now it does.

Automatic Accounts - And lastly, with an eye to the future, when new FAS accounts are added to the packager group, FMN notices this and creates them an FMN account turned on by default. (This is in preparation for the upcoming switch of FMN from opt-in to opt-out for packagers.)

Clock Skew - There's a feature where the IRC backend will try to tell you how delayed it was in delivering your message. If your koji build finishes while FMN is busy with other stuff, when it finally gets to your message it will tell you "your koji build finished 2 minutes ago". There was a little cosmetic bug in this where, due to clock skew between servers, FMN would tell you that "your koji build finished 2 seconds in the future".. confusing! This release fixes that.
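One simple way to avoid "in the future" messages is to clamp a negative delay to zero before formatting it. This is a guess at the shape of such a fix, not the actual change in the release; the function name and the wording of its output are made up.

```python
def describe_delay(event_time, delivery_time):
    """Describe how long ago an event happened, given two epoch timestamps.

    A negative difference (clock skew between servers) is clamped to zero.
    """
    delta = max(0, int(delivery_time - event_time))
    if delta < 60:
        return "%d seconds ago" % delta
    return "%d minutes ago" % (delta // 60)


on_time = describe_delay(event_time=1000, delivery_time=1120)
skewed = describe_delay(event_time=1000, delivery_time=998)
```

The first call yields "2 minutes ago"; the second, where the delivering server's clock is two seconds behind, yields "0 seconds ago" instead of a negative delay.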

Cache Locking - The backend keeps an in-memory cache of everyone's notification preferences (which it intelligently invalidates based on fedmsg activity). Some optimization improvements were made on the thread locking around that cache.

Thanks for all the RFEs and bugs filed so far. Happy Hacking!

github + fedmsg, sign up your repos to feed the bus

Jun 18, 2014 | categories: fedmsg, github, fedora

I'm proud to announce a new web service that bridges upstream GitHub repository activity into the fedmsg bus.

Please check it out and let us know of any bugs you find. The regular fedmsg topics documentation shows examples of just what kinds of messages get rebroadcast, so you can read all about that there if you like. The site has a neat dashboard that lets you toggle all of your various repos on and off -- you have control over which of your projects get published and which don't.

I know there's concern out there about building any kind of dependency between our infrastructure and proprietary stacks like GitHub, Inc. This new GitHub integration was a quick win because 1) we could do it securely (unlike with transifex), 2) it comes with a low-maintenance, self-service dashboard, and 3) lots of our upstreams are on github or have mirrors on github. If you have another message source out there that you'd like to bridge in, drop into #fedora-apps and let me know. We'll see if we can figure something out that will work.

Anyways.. we should probably make some Fedora Badges for upstream development activity now, right? And, as a heads up, be on the lookout for new jenkins and bugzilla integration... coming soon.

Happy Hacking!

Optimizing "Fedora Notifications"

Jun 13, 2014 | categories: fmn, fedmsg, fedora

Back story: with some hacks, we introduced new monitoring for fedmsg.

The good news is, most everything was smooth sailing.. with one exception. FMN has been having a terrible time staying on top of its workload. After we brought the ARM Koji hub onto the fedmsg bus, FMN just started to drown.

Bad News Bears

Here's a depiction of its "queue length" or backlog -- the number of messages it has received, but has yet to process. (We want that number to be as low as possible, such that it handles messages as soon as they arrive.)

You read it right -- that's hundreds of thousands of messages still to process. You wouldn't get your messages until the day after and, besides which, FMN would never get the backlog under control. It would just continue to pile up until we restarted it, dropping all buffered messages.

Optimizing

We introduced a couple improvements to the back-end code which have produced favorable results.

Firstly, we rewrote the pkgdb caching mechanism twice over. It is typical for users to have a rule in their preferences like "notify me of any event having anything to do with a package that I have commit access on". That commit access data is stored in pkgdb (now pkgdb2). In order for that to work, the FMN back-end has to query pkgdb for what packages you have commit access on.

In the first revision, we would cache the ACLs per-user. Our call to pkgdb2 to get all that information per-user took around 15 seconds to execute and we would cache the results for around 5 minutes. This became untenable quickly: as we grew closer to 20 test users, we ended up just querying pkgdb all day. Math it out: 20 users at 15 seconds per query is 5 minutes to cache all 20 users, and by that time the cache of the first user had expired, so we had to start over.
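The same arithmetic as a few lines of code, using the numbers from the paragraph above. The function name is made up; the "duty cycle" is just the fraction of each cache lifetime spent re-querying pkgdb2, assuming the queries run one after another.

```python
def refresh_duty_cycle(users, seconds_per_query, cache_ttl_seconds):
    """Fraction of each cache lifetime spent refreshing every user's ACLs.

    Assumes queries are issued serially, one user at a time.
    """
    return (users * seconds_per_query) / cache_ttl_seconds


# 20 users * 15 s = 300 s of querying per 300 s cache lifetime.
at_twenty_users = refresh_duty_cycle(users=20, seconds_per_query=15, cache_ttl_seconds=5 * 60)
at_ten_users = refresh_duty_cycle(users=10, seconds_per_query=15, cache_ttl_seconds=5 * 60)
```

At 20 users the value reaches 1.0, meaning the back-end does nothing but refresh the cache; at 10 users it is already spending half its time on pkgdb2.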

We moved temporarily to caching per-package, which was at least tenable.

Secondly, we (smartly, I think) reorganized the way the FMN back-end manages its own database. In the first revision of the stack, every time a new message would arrive FMN would:

- query the database for every rule and preference for every user,

- load those into memory,

- figure out where it should forward the message,

- do that, and then drop the rules and preferences (only to load them again a moment later).

Nowadays, we cache the entire ruleset in memory (forever). The front-end is instrumented to publish fedmsg messages whenever a user edits something in their profile -- when the back-end receives such a message, it invalidates its cache and reloads from the database (i.e., infrequently and as-needed).

We ended up applying the same scheme to the pkgdb caching, such that whenever a message arrives indicating that someone's ACLs have changed somewhere in pkgdb, we delete that portion of our local cache for that user, which is then refreshed the next time it is needed.
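Here is a minimal sketch of that message-driven invalidation scheme. It is not FMN's actual code: the class name, the `load_for_user` callback and the way an incoming fedmsg message would be mapped to `invalidate()` are all assumptions for illustration.

```python
import threading


class PreferenceCache:
    """Per-user cache that never expires on its own.

    Entries are dropped only when invalidate() is called (e.g. because a
    message says something changed) and reloaded lazily on the next get().
    """

    def __init__(self, load_for_user):
        self._load = load_for_user  # hypothetical loader: a DB or pkgdb query
        self._lock = threading.Lock()
        self._data = {}

    def get(self, user):
        with self._lock:
            if user not in self._data:
                self._data[user] = self._load(user)
            return self._data[user]

    def invalidate(self, user=None):
        """Drop one user's entry, or the whole cache when user is None."""
        with self._lock:
            if user is None:
                self._data.clear()
            else:
                self._data.pop(user, None)


queries = []


def fake_pkgdb_lookup(user):
    # Stand-in for the slow query; records each call so the effect is visible.
    queries.append(user)
    return {"user": user, "packages": []}


cache = PreferenceCache(fake_pkgdb_lookup)
cache.get("alice")
cache.get("alice")         # served from memory, no second query
cache.invalidate("alice")  # e.g. a message said alice's ACLs changed
cache.get("alice")         # reloaded on demand
```

This sketch holds the lock while loading, which keeps it simple but makes other readers wait on a slow query; a real implementation would likely want finer-grained locking.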

Things get better

Take a look now:

It still spikes when major events happen, but it quickly gets things under control again. More to come... (and, if you love optimizing stuff and looking for hotspots, please come get involved; there are too many things to hack on).

Join the Fedora Infrastructure Apps team in #fedora-apps on freenode!
