[three]Bean

statscache - thoughts on a new Fedora Infrastructure backend service

May 26, 2015 | categories: fedora, datagrepper, statscache

We've been working on a new backend service called statscache. It's not even close to done, but it has gotten to the point that it deserves an introduction.

A little preamble: the Fedora Infrastructure team runs ~40 web services. Community goings-on are channeled through IRC meetings (for which we have a bot), wiki pages (which we host), and more. Packages are built in our buildsystem. QA feedback goes through lots of channels, but particularly the updates system. There are lots of systems with many heterogeneous interfaces. About three years ago, we started linking them all together with a common message bus on the backend, and this has granted us a handful of advantages. One of them is that we now have a common history for all Fedora development activity (and, to a lesser extent, community activity).

There is a web interface to this common history called datagrepper. Here are some example queries to get a feel for it:

- all messages from the last five minutes

- all karma commands from IRC

- all messages about the package firefox
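Each of these is a plain HTTP GET against datagrepper's raw endpoint. As a minimal sketch, assuming the `/datagrepper/raw/` URL used by the benchmark script further down this page and the `delta` (seconds) and `package` query parameters that appear elsewhere here, the first and third queries could be built like this. The `datagrepper_url` helper is hypothetical, and the IRC karma query is left out because its exact topic isn't given here.

```python
from urllib.parse import urlencode

# Base URL of datagrepper's raw-history endpoint (as used in the benchmark script).
DATAGREPPER_RAW = "https://apps.fedoraproject.org/datagrepper/raw/"


def datagrepper_url(**params):
    """Build a datagrepper query URL from keyword arguments (hypothetical helper).

    List values become repeated parameters, e.g. user=["a", "b"] -> user=a&user=b.
    """
    return DATAGREPPER_RAW + "?" + urlencode(params, doseq=True)


# All messages from the last five minutes (delta is measured in seconds).
recent = datagrepper_url(delta=300)

# All messages about the firefox package.
firefox = datagrepper_url(package="firefox")

print(recent)
print(firefox)
```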

On top of this history API, we can build other things, the first of which was the release engineering dashboard. It is a pure HTML/JS app with no backend server components of its own (no Python); it directs your browser to make many different queries to the datagrepper API. It 1) asks for all the recent messages in categories X, Y, and Z, 2) locally filters out the irrelevant ones, and 3) tries to render only the latest events.
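To make the cost of that approach concrete, here is a rough Python sketch of steps 2 and 3 (the real dashboard does this in JavaScript in the browser). It assumes each raw message is a dict with at least a `topic` string and a `timestamp` in epoch seconds; the function name is made up for illustration. Every page load has to download and scan the whole batch before anything can be shown.

```python
def latest_per_topic(messages, wanted_topics):
    """Keep only the wanted topics and return the newest message for each.

    Assumes each message is a dict with "topic" and "timestamp" keys.
    """
    latest = {}
    for msg in messages:
        topic = msg["topic"]
        if topic not in wanted_topics:
            continue  # step 2: filter out irrelevant messages locally
        current = latest.get(topic)
        if current is None or msg["timestamp"] > current["timestamp"]:
            latest[topic] = msg  # step 3: remember only the newest event
    return latest


BUILD = "org.fedoraproject.prod.buildsys.build.state.change"
BADGE = "org.fedoraproject.prod.fedbadges.badge.award"

messages = [
    {"topic": BUILD, "timestamp": 100},
    {"topic": BUILD, "timestamp": 250},
    {"topic": BADGE, "timestamp": 300},
]
result = latest_per_topic(messages, {BUILD})
print(result[BUILD]["timestamp"])  # 250
```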

It is arguably useful. QA likes it so they can see what new things are available to test. In the future, perhaps the websites team can use it to get the latest AMIs for images uploaded to Amazon, so they can in turn update getfedora.org.

It is arguably slow. I mean, that thing really crawls when you try to load the page and we've already put some tweaks in place to try to make it incrementally faster. We need a new architecture.

Enter statscache. The releng dash is pulling raw data from the server to the browser, and then computing some 'latest values' from there to display. Why don't we compute and cache those latest values in a server-side service instead? This way they'll be ready and available for snappy delivery to web clients and we won't have to stress out the master archive DB with all those queries trawling for gems.

@rtnpro and I have been working on it for the past few months and have a nice basis for the framework. It can currently cache some rudimentary stuff and most of the releng-dash information, but we have big plans. It is pluggable, so if there's a new "thing you want to know about", you can write a statscache plugin for it, install it, and we'll start tracking that statistic over time. There are all sorts of metrics, both the well-understood kind and the half-baked kind, that we can track and make available for visualization.
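Here is a minimal sketch of the server-side idea. It assumes a hypothetical plugin interface: `process(msg)` is called once per bus message, and `latest()` reads the cached answer. This is not statscache's actual plugin API. It only illustrates how the answer is computed incrementally as messages arrive, instead of being recomputed from the archive on every page load.

```python
class LatestBuildPlugin:
    """Hypothetical statscache-style plugin: remember the newest build message.

    The process()/latest() interface is an illustrative assumption, not the
    real statscache plugin API.
    """

    topic = "org.fedoraproject.prod.buildsys.build.state.change"

    def __init__(self):
        self._latest = None

    def process(self, msg):
        # Called once per message as it arrives on the bus; constant work each time.
        if msg.get("topic") != self.topic:
            return
        if self._latest is None or msg["timestamp"] > self._latest["timestamp"]:
            self._latest = msg

    def latest(self):
        # Served straight from the cache: no query against the archive DB.
        return self._latest


plugin = LatestBuildPlugin()
for msg in [
    {"topic": LatestBuildPlugin.topic, "timestamp": 10},
    {"topic": "org.fedoraproject.prod.fedbadges.badge.award", "timestamp": 20},
    {"topic": LatestBuildPlugin.topic, "timestamp": 30},
]:
    plugin.process(msg)

print(plugin.latest()["timestamp"])  # 30
```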

We can then plug those graphs in as widgets to the larger Fedora Hubs effort we're embarking on (visit the wiki page to learn about it). Imagine user profile pages there with nice d3.js graphs of personal and aggregate community activity. Something in the style of the contributions calendar that GitHub puts on user profile pages would be a perfect fit (but for Fedora activity, not GitHub activity).
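The data behind a contributions-calendar graph is just a count of events per day. As a sketch, assuming a list of message timestamps in epoch seconds (like the `timestamp` field datagrepper returns) and an output format invented for illustration, a plugin could reduce a user's activity to one count per UTC day for a d3.js widget to draw:

```python
from collections import Counter
from datetime import datetime, timezone


def daily_counts(timestamps):
    """Bucket epoch timestamps into per-day (UTC) activity counts.

    Returns a list of {"date": "YYYY-MM-DD", "count": n} dicts sorted by date.
    The output shape is an assumption, not an existing statscache format.
    """
    counts = Counter(
        datetime.fromtimestamp(ts, tz=timezone.utc).date().isoformat()
        for ts in timestamps
    )
    return [{"date": day, "count": n} for day, n in sorted(counts.items())]


print(daily_counts([0, 3600, 86400]))
```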

Check out the code:

- the main repo

- the plugins repo

At this point we need:

- New plugins of all kinds. What kinds of running stats/metrics would be interesting?

- By writing plugins that will flex the API of the framework, we want to find edge cases that cannot be easily coded. With those we can in turn adjust the framework now -- early -- instead of 6 months from now when we have other code relying on this.

- A set of example visualizations would be nice. I don't think statscache should host or serve the visualization, but it will help to build a few toy ones in an examples/ directory to make sure the statscache API can be used sanely. We've been doing this with a statscache branch of the releng dash repo.

- Unit/functional test cases. We have some, but could use more.

- Stress testing. With a handful of plugins, how much does the backend suffer under load?

- Plugin discovery. It would be nice to have an API endpoint we can query to find out what plugins are installed and active on the server.

- Chrome around the web interface? It currently serves only JSON responses, but a nice little documentation page that will introduce a new user to the API would be good (kind of like datagrepper itself).

- A deployment plan. We're pretty good at doing this now so it shouldn't be problematic.

Revisiting Datagrepper Performance

Feb 27, 2015 | categories: fedmsg, datanommer, fedora, datagrepper, postgres

In Fedora Infrastructure, we run a service somewhat hilariously called datagrepper, which lets you make queries over HTTP about the history of our message bus. (The service that feeds the database is called datanommer.) We recently crossed the mark of 20 million messages in the store, and the thing still works, but it has become noticeably slower over time. This affects other dependent services:

- The releng dashboard and others make HTTP queries to datagrepper.

- The fedora-packages app waits on datagrepper results to present brief histories of packages.

- The Fedora Badges backend queries the db directly to figure out if it should award badges or not.

- The notifications frontend queries the db to try to display what messages in the past would have matched a hypothetical set of rules.

I've written about this chokepoint before, but haven't had time to really do anything about it... until this week!

Measuring how bad it is

First, some stats -- I wrote this benchmarking script to try a handful of different queries on the service and report some average response times:

    #!/usr/bin/env python
    import itertools
    import sys
    import time

    import requests

    url = 'https://apps.fedoraproject.org/datagrepper/raw/'
    attempts = 8

    possible_arguments = [
        ('delta', 86400),
        ('user', 'ralph'),
        ('category', 'buildsys'),
        ('topic', 'org.fedoraproject.prod.buildsys.build.state.change'),
        ('not_package', 'bugwarrior'),
    ]

    result_map = {}
    for left, right in itertools.product(possible_arguments, possible_arguments):
        if left is right:
            continue

        # Treat (a, b) and (b, a) as the same query so each pair runs only once.
        key = frozenset([left, right])
        if key in result_map:
            continue

        results = []
        params = dict([left, right])
        for attempt in range(attempts):
            start = time.time()
            r = requests.get(url, params=params)
            assert r.status_code == 200
            results.append(time.time() - start)

        # Throw away the two fastest and the two slowest runs (outliers)
        results.remove(min(results))
        results.remove(min(results))
        results.remove(max(results))
        results.remove(max(results))

        average = sum(results) / len(results)
        result_map[key] = average
        print("%0.4f %r" % (average, str(params)))
        sys.stdout.flush()

The results get printed out in two columns.

- The leftmost column is the average number of seconds it takes to make a query (we try 8 times, throw away the two shortest and the two longest, and average the remaining four).

- The rightmost column is a description of the query arguments passed to datagrepper. Different kinds of queries take different times.
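The averaging step is a trimmed mean. Here is a small standalone sketch of it; the `trimmed_mean` name and the `trim` parameter are made up for illustration. With 8 samples and `trim=2` it matches what the script does: it drops the two smallest and two largest timings and averages the middle four.

```python
def trimmed_mean(samples, trim=2):
    """Average after dropping the `trim` smallest and `trim` largest samples."""
    if len(samples) <= 2 * trim:
        raise ValueError("need more than 2 * trim samples")
    # Slice with len(...) - trim rather than -trim so that trim=0 keeps everything.
    kept = sorted(samples)[trim:len(samples) - trim]
    return sum(kept) / len(kept)


timings = [9, 2, 5, 4, 6, 3, 7, 1]  # e.g. milliseconds from 8 attempts
print(trimmed_mean(timings))  # 4.5, the mean of 3, 4, 5 and 6
```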

This first set of results is from our production instance as-is:

    7.7467 "{'user': 'ralph', 'delta': 86400}"
    0.6984 "{'category': 'buildsys', 'delta': 86400}"
    0.7801 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'delta': 86400}"
    6.0842 "{'not_package': 'bugwarrior', 'delta': 86400}"
    7.9572 "{'category': 'buildsys', 'user': 'ralph'}"
    7.2941 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'user': 'ralph'}"
    11.751 "{'user': 'ralph', 'not_package': 'bugwarrior'}"
    34.402 "{'category': 'buildsys', 'topic': 'org.fedoraproject.prod.buildsys.build.state.change'}"
    36.377 "{'category': 'buildsys', 'not_package': 'bugwarrior'}"
    44.536 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'not_package': 'bugwarrior'}"

Notice that a handful of queries finish in under a second, but some are unbearably long. A seven-second response time is too long, and a 44-second response time is way too long.

Setting up a dev instance

I grabbed the dump of our production database and imported it into a fresh postgres instance in our private cloud to mess around. Before making any further modifications, I ran the benchmarking script again on this new guy and got some different results:

    5.4305 "{'user': 'ralph', 'delta': 86400}"
    0.5391 "{'category': 'buildsys', 'delta': 86400}"
    0.4992 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'delta': 86400}"
    4.5578 "{'not_package': 'bugwarrior', 'delta': 86400}"
    6.4852 "{'category': 'buildsys', 'user': 'ralph'}"
    6.3851 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'user': 'ralph'}"
    10.932 "{'user': 'ralph', 'not_package': 'bugwarrior'}"
    9.1895 "{'category': 'buildsys', 'topic': 'org.fedoraproject.prod.buildsys.build.state.change'}"
    14.950 "{'category': 'buildsys', 'not_package': 'bugwarrior'}"
    12.044 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'not_package': 'bugwarrior'}"

A couple of things differ here that could make this instance faster:

- No SSL on the HTTP requests (almost irrelevant)

- No other load on the db from other live requests (likely irrelevant)

- The db was freshly imported. (The last time we moved the db server, things got magically faster. I think there's something about the way postgres stores data internally that leaves a freshly imported database better organized, but I have no data or real know-how to support this claim.)

Experimenting with indexes

I first tried adding indexes on the category and topic columns of the messages table (which are common columns used for filter operations). We already have an index on the timestamp column, without which the whole service is just unusable.

Some results after adding those:

    0.1957 "{'user': 'ralph', 'delta': 86400}"
    0.1966 "{'category': 'buildsys', 'delta': 86400}"
    0.1936 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'delta': 86400}"
    0.1986 "{'not_package': 'bugwarrior', 'delta': 86400}"
    6.6809 "{'category': 'buildsys', 'user': 'ralph'}"
    6.4602 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'user': 'ralph'}"
    10.982 "{'user': 'ralph', 'not_package': 'bugwarrior'}"
    3.7270 "{'category': 'buildsys', 'topic': 'org.fedoraproject.prod.buildsys.build.state.change'}"
    14.906 "{'category': 'buildsys', 'not_package': 'bugwarrior'}"
    7.6618 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'not_package': 'bugwarrior'}"

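Response times improved where you would expect. All four queries bounded by delta now return in about 0.2 seconds, and the category+topic query dropped from 9.19 to 3.73 seconds. The queries that combine user with another filter barely moved.

Those columns express simple one-to-many relationships: a message has one topic and one category, while each topic and each category is associated with many messages. No JOIN is required to filter on them.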
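To see what these indexes buy, you can ask the database how it plans to run a filter. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` as a stand-in for PostgreSQL's `EXPLAIN`. The table is a simplified, hypothetical version of the messages table (not datanommer's real schema), and the index names are made up. With an index on `topic`, an equality filter becomes an index search instead of a full table scan.

```python
import sqlite3

# Simplified, hypothetical messages table; SQLite stands in for PostgreSQL.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE messages (
        id INTEGER PRIMARY KEY,
        timestamp REAL,
        topic TEXT,
        category TEXT
    );
    CREATE INDEX idx_messages_timestamp ON messages (timestamp);
    -- The two new indexes on commonly filtered columns:
    CREATE INDEX idx_messages_topic ON messages (topic);
    CREATE INDEX idx_messages_category ON messages (category);
""")


def query_plan(sql, params=()):
    """Return the planner's human-readable steps for running `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description


plan = query_plan(
    "SELECT id FROM messages WHERE topic = ?",
    ("org.fedoraproject.prod.buildsys.build.state.change",),
)
print(plan)  # a SEARCH step that names idx_messages_topic
```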

Handling the many-to-many cases

Speeding up the queries that require filtering on users and packages is trickier. They are many-to-many relations: each user is associated with multiple messages, and a message may be associated with many users (or many packages).

I did some research, and through trial and error found that adding a composite primary key on the bridge tables gave a nice performance boost. See the results here:

    0.2074 "{'user': 'ralph', 'delta': 86400}"
    0.2091 "{'category': 'buildsys', 'delta': 86400}"
    0.2099 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'delta': 86400}"
    0.2056 "{'not_package': 'bugwarrior', 'delta': 86400}"
    1.4863 "{'category': 'buildsys', 'user': 'ralph'}"
    1.4553 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'user': 'ralph'}"
    1.8186 "{'user': 'ralph', 'not_package': 'bugwarrior'}"
    3.5525 "{'category': 'buildsys', 'topic': 'org.fedoraproject.prod.buildsys.build.state.change'}"
    10.9242 "{'category': 'buildsys', 'not_package': 'bugwarrior'}"
    3.5214 "{'topic': 'org.fedoraproject.prod.buildsys.build.state.change', 'not_package': 'bugwarrior'}"

The best so far! That one 10.9-second query (category plus not_package) is undesirable, but it also makes sense: we're asking it to first filter for all buildsys messages (the spammiest category) and then to prune out the ones about bugwarrior. If you narrow it to just the builds by querying on topic instead of category (builds are a proper subset of that category, and usually what you want anyway), the same not_package query takes 3.5s.

All around, I see a 3.5x speed increase.
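Why a composite primary key helps: the database backs a primary key with an index on its columns (PostgreSQL does this for every primary key), so "all messages for user X" becomes an index lookup on the bridge table instead of a scan. The sketch below shows this with SQLite standing in for PostgreSQL. The `user_messages` table and its `username`/`msg` columns are a hypothetical simplification, not datanommer's actual schema.

```python
import sqlite3

# Hypothetical, simplified schema: messages plus a user<->message bridge table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE messages (id INTEGER PRIMARY KEY, topic TEXT);
    CREATE TABLE user_messages (
        username TEXT NOT NULL,
        msg INTEGER NOT NULL REFERENCES messages (id),
        -- Composite primary key: SQLite builds an automatic index on
        -- (username, msg) to enforce it.
        PRIMARY KEY (username, msg)
    );
""")

sql = """
    SELECT m.id, m.topic
    FROM messages AS m
    JOIN user_messages AS um ON um.msg = m.id
    WHERE um.username = ?
"""
plan = [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, ("ralph",))]
for step in plan:
    print(step)  # the bridge-table step uses sqlite_autoindex_user_messages_1
```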

Rolling it out

The code is set to be merged into datanommer, and I wrote an Ansible playbook to orchestrate pushing the change out. I'd push it out now, but we just entered the infrastructure freeze for the Fedora 22 Alpha release. Once we're through that and all thawed, we should be good to go.

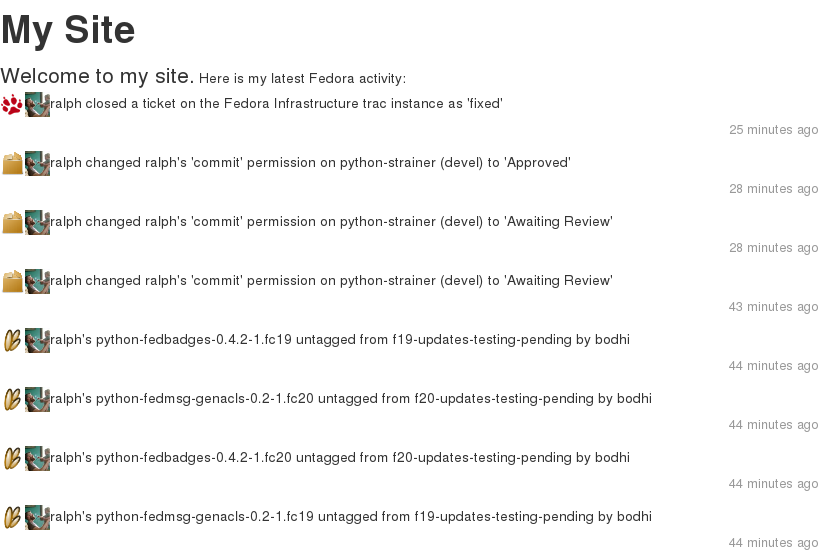

A fedmsg widget for your site

Apr 01, 2014 | categories: fedmsg, widgetry, fedora, datagrepper

Not an April Fools' joke: there are more cool fedmsg tools from the Fedora Infrastructure Team. The datagrepper app now provides a little self-expanding JavaScript widget that you can embed on your blog or website. I have it installed on my blog; if you look there (here?), it should show up on the right-hand side of the screen.

Here's the example from the datagrepper docs:

    <html>
    <body>
      <h1>My Site</h1>
      <p class="lead">Welcome to my site.</p>
      <p>Here is my latest Fedora activity:</p>
      <script
        id="datagrepper-widget"
        src="https://apps.fedoraproject.org/datagrepper/widget.js?css=true"
        data-user="ralph"
        data-rows_per_page="40">
      </script>
      <footer>Happy Hacking!</footer>
    </body>
    </html>

Just copy and paste that into a file called testing-stuff.html on your machine, and then open that file in your browser. You should see something like this:

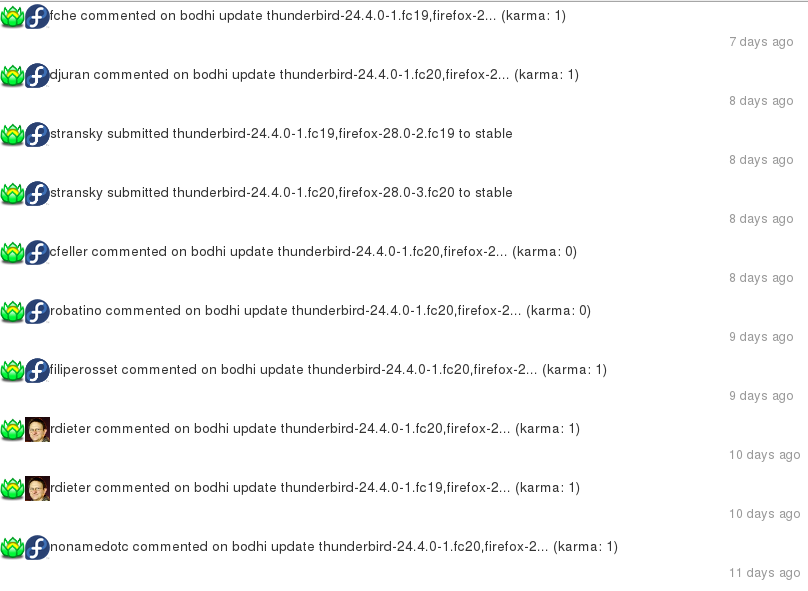

As the docs say, you can change the data- attributes on the `<script>` tag to perform different kinds of queries. For instance, the following would render a widget showing only Bodhi events about the Firefox package:

    <script id="datagrepper-widget" src="https://apps.fedoraproject.org/datagrepper/widget.js?css=true" data-category="bodhi" data-package="firefox" data-rows_per_page="20"> </script>

You might want to include such a thing on a status page for a project you're working on.
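If you generate such a status page, you could write the tag out programmatically. This is a hypothetical helper, not part of datagrepper. It assumes, following the examples here, that each query parameter maps one-to-one onto a `data-<name>` attribute, and it produces the same markup as the Bodhi/Firefox example.

```python
from html import escape

WIDGET_SRC = "https://apps.fedoraproject.org/datagrepper/widget.js?css=true"


def widget_tag(**query):
    """Render a datagrepper widget <script> tag (hypothetical helper).

    Each keyword becomes a data-<name> attribute, e.g. package="firefox"
    -> data-package="firefox". Values are HTML-escaped.
    """
    attrs = " ".join(
        '%s="%s"' % (escape("data-" + name), escape(str(value)))
        for name, value in query.items()
    )
    return '<script id="datagrepper-widget" src="%s" %s> </script>' % (
        escape(WIDGET_SRC),
        attrs,
    )


print(widget_tag(category="bodhi", package="firefox", rows_per_page=20))
```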

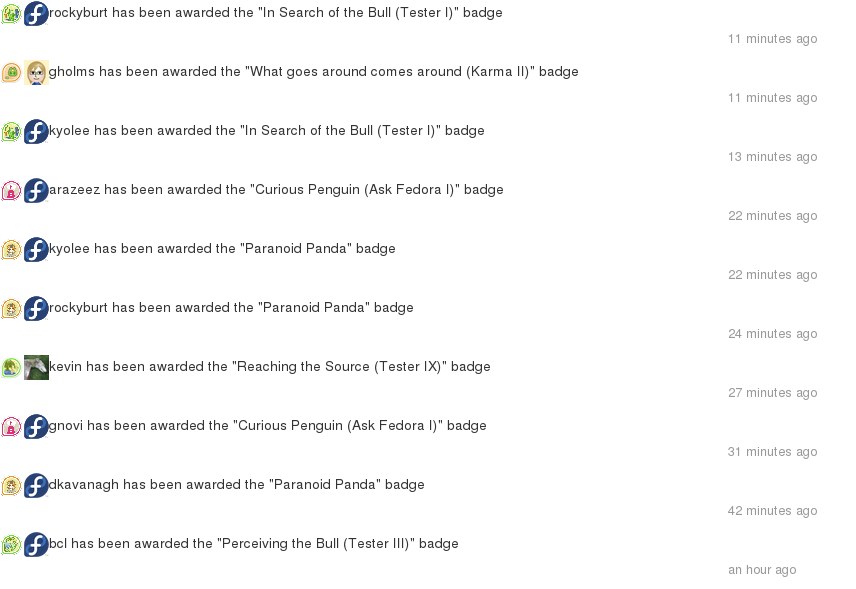

You can query any of the fedmsg topics (see the fedmsg docs for the full list). For example, org.fedoraproject.prod.fedbadges.badge.award renders a feed of the latest Fedora Badges awards:

    <script id="datagrepper-widget" src="https://apps.fedoraproject.org/datagrepper/widget.js?css=true" data-topic="org.fedoraproject.prod.fedbadges.badge.award" data-rows_per_page="40"> </script>

Please let me know in #fedora-apps on freenode if you have any questions (or if you find some cool use for it -- I love hearing that stuff).

Querying user activity

Mar 24, 2014 | categories: fedmsg, fedora, datagrepper

In July, I wrote about some tools you can use to query Fedora package history. This post is just to point out that you can use the same approach to query user history. (It is the same data source that we use in Fedora Badges queries -- it also comes with a nice HTML output). Here's some example output from the console:

    ~❯ ./userwat ralph
    2014-03-24T00:15:30 ralph submitted datagrepper-0.4.0-2.el6 to stable https://admin.fedoraproject.org/updates/datagrepper-0.4.0-2.el6
    2014-03-24T00:15:28 ralph submitted python-fedbadges-0.4.1-1.el6 to stable https://admin.fedoraproject.org/updates/python-fedbadges-0.4.1-1.el6
    2014-03-24T00:15:28 ralph submitted python-taskw-0.8.1-1.el6 to stable https://admin.fedoraproject.org/updates/python-taskw-0.8.1-1.el6
    2014-03-24T00:15:27 ralph submitted python-tahrir-api-0.6.0-2.el6 to stable https://admin.fedoraproject.org/updates/python-tahrir-api-0.6.0-2.el6
    2014-03-24T00:15:27 ralph submitted python-fedbadges-0.4.0-1.el6 to stable https://admin.fedoraproject.org/updates/python-fedbadges-0.4.0-1.el6
    2014-03-23T13:51:16 ralph updated a ticket on the fedora-badges trac instance https://fedorahosted.org/fedora-badges/ticket/122
    2014-03-21T17:08:21 ralph's packages.yml playbook run completed
    2014-03-21T17:03:38 ralph started an ansible run of packages.yml
    2014-03-21T16:31:48 ralph updated a ticket on the fedora-badges trac instance https://fedorahosted.org/fedora-badges/ticket/213
    2014-03-21T15:09:10 ralph's python-bugzilla2fedmsg-0.1.3-1.el6 tagged into dist-6E-epel-testing by bodhi http://koji.fedoraproject.org/koji/taginfo?tagID=137

The tool isn't packaged at all, but here's the script if you'd like to copy and use it:

    #!/usr/bin/env python
    """ userwat - A script to query a user's history from fedmsg.

    Author:     Ralph Bean
    License:    LGPLv2+
    """

    import datetime
    import sys

    import requests


    def format_date(stamp):
        return datetime.datetime.fromtimestamp(stamp).isoformat()


    def make_request(user, page):
        response = requests.get(
            "https://apps.fedoraproject.org/datagrepper/raw",
            params=dict(
                meta=["subtitle", "link", "title"],
                start=1,
                user=[user],
                rows_per_page=100,
                page=page,
            )
        )
        return response.json()


    def main(user):
        # The first request tells us how many pages of results there are.
        results = make_request(user, page=1)
        for i in range(results['pages']):
            page = i + 1
            results = make_request(user, page=page)
            for msg in results['raw_messages']:
                print(format_date(msg['timestamp']), end=' ')
                #print(msg['meta']['title'], end=' ')
                print(msg['meta']['subtitle'], end=' ')
                print(msg['meta']['link'])


    if __name__ == '__main__':
        if len(sys.argv) != 2:
            print(sys.argv)
            print("Usage: userwat <FAS_USERNAME>")
            sys.exit(1)

        username = sys.argv[1]
        main(username)

Planet Spew and a Badge Bonanza

Dec 13, 2013 | categories: planet, datagrepper, fedora, badges

Last night, we found a cronjob in Fedora Infrastructure's puppet repo that had incorrect syntax. It had been broken since 2009:

"Let's fix it!" "Yeah!" "Fixing is always good."

The cronjob's job was to run tmpwatch on the Fedora Planet cache. When it ran for the first time since 2009, it nuked a lot of files.

- This caused the planet scraper to re-scrape a great many blog posts, also for the first time since 2009.

- This then caused the planet scraper to broadcast fedmsg messages indicating that new blog posts had been found (even though they were old ones).

- The badge awarder picked up these messages and awarded a wheelbarrow's worth of new blogging badges to almost everyone.

Take a look at the datagrepper history according to zodbot:

    threebean │ .quote BDG
       zodbot │ BDG, fedbadges +1097.35% over yesterday
       zodbot │ ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▆▆█▂▂▁▁▁ ⤆ over 24 hours
       zodbot │ ↑ 18:19 UTC 12/12 ↑ 18:19 UTC 12/13
    threebean │ .quote PLN
       zodbot │ PLN, planet +69411.11% over yesterday
       zodbot │ ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▃█▁▁▁▁▁▁▁ ⤆ over 24 hours
       zodbot │ ↑ 18:18 UTC 12/12 ↑ 18:18 UTC 12/13
    ianweller │ lol

That's a lot of planet messages. :) Congratulations on your new blogging badges!
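zodbot's exact formula isn't shown here, but here is one plausible reading of that output, offered as an assumption. The percentage is (today - yesterday) / yesterday × 100, and the sparkline scales each hourly count onto eight block characters. Under that reading, +1097.35% means roughly 12 times yesterday's badge traffic, and +69411.11% about 695 times the usual planet traffic. A sketch of both calculations (both function names are made up):

```python
BARS = "▁▂▃▄▅▆▇█"


def sparkline(values):
    """Map each value onto one of eight block characters, scaled min..max."""
    lo, hi = min(values), max(values)
    span = hi - lo or 1  # avoid dividing by zero when all values are equal
    return "".join(BARS[int((v - lo) / span * (len(BARS) - 1))] for v in values)


def percent_change(today, yesterday):
    """Relative change of today's count versus yesterday's, in percent."""
    return (today - yesterday) / yesterday * 100.0


print(sparkline([0, 0, 7]))    # ▁▁█
print(percent_change(12, 1))   # 1100.0
```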
