[three]Bean

fedmsg Middleware - Notifications in Every App?

Oct 29, 2012 | categories: python, fedmsg, fedora

I made this screencast demonstrating the concept of fedmsg middleware for notifications: "Inject a WebSocket connection on every page!"
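The screencast itself isn't reproduced here, but the core idea (a layer of WSGI middleware that rewrites every HTML page so it pulls in a WebSocket client) can be sketched in plain Python. This is a minimal, hypothetical sketch, not fedmsg's actual middleware: `inject_snippet` and the `<script>` snippet are made-up names, and it buffers the whole response body, which suits small pages but not streaming responses.

```python
def inject_snippet(app, snippet):
    """Wrap a WSGI app so HTML responses get `snippet` inserted before </body>.

    Hypothetical sketch: buffers the whole body, so it suits small pages only.
    """
    snippet_bytes = snippet.encode("utf-8")

    def middleware(environ, start_response):
        captured = {}
        written = []  # data sent through the legacy write() callable

        def capturing_start_response(status, headers, exc_info=None):
            captured["status"] = status
            captured["headers"] = list(headers)
            captured["exc_info"] = exc_info
            return written.append

        result = app(environ, capturing_start_response)
        try:
            # Iterate first: generator apps may call start_response lazily.
            chunks = list(result)
        finally:
            if hasattr(result, "close"):
                result.close()
        body = b"".join(written) + b"".join(chunks)

        headers = captured["headers"]
        content_type = next(
            (value for name, value in headers if name.lower() == "content-type"), ""
        )
        if content_type.startswith("text/html"):
            head, sep, tail = body.rpartition(b"</body>")
            if sep:  # only touch pages that actually have a closing body tag
                body = head + snippet_bytes + sep + tail

        # The body length may have changed, so recompute Content-Length.
        headers = [(n, v) for n, v in headers if n.lower() != "content-length"]
        headers.append(("Content-Length", str(len(body))))
        start_response(captured["status"], headers, captured["exc_info"])
        return [body]

    return middleware
```

Because the wrapper only needs a WSGI callable, it can sit in front of an app written with any framework, which is the appeal of doing notifications as middleware rather than per-app code.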

As usual, if you want to get involved, hit me up on IRC in #fedora-apps on freenode -- I'm threebean there.

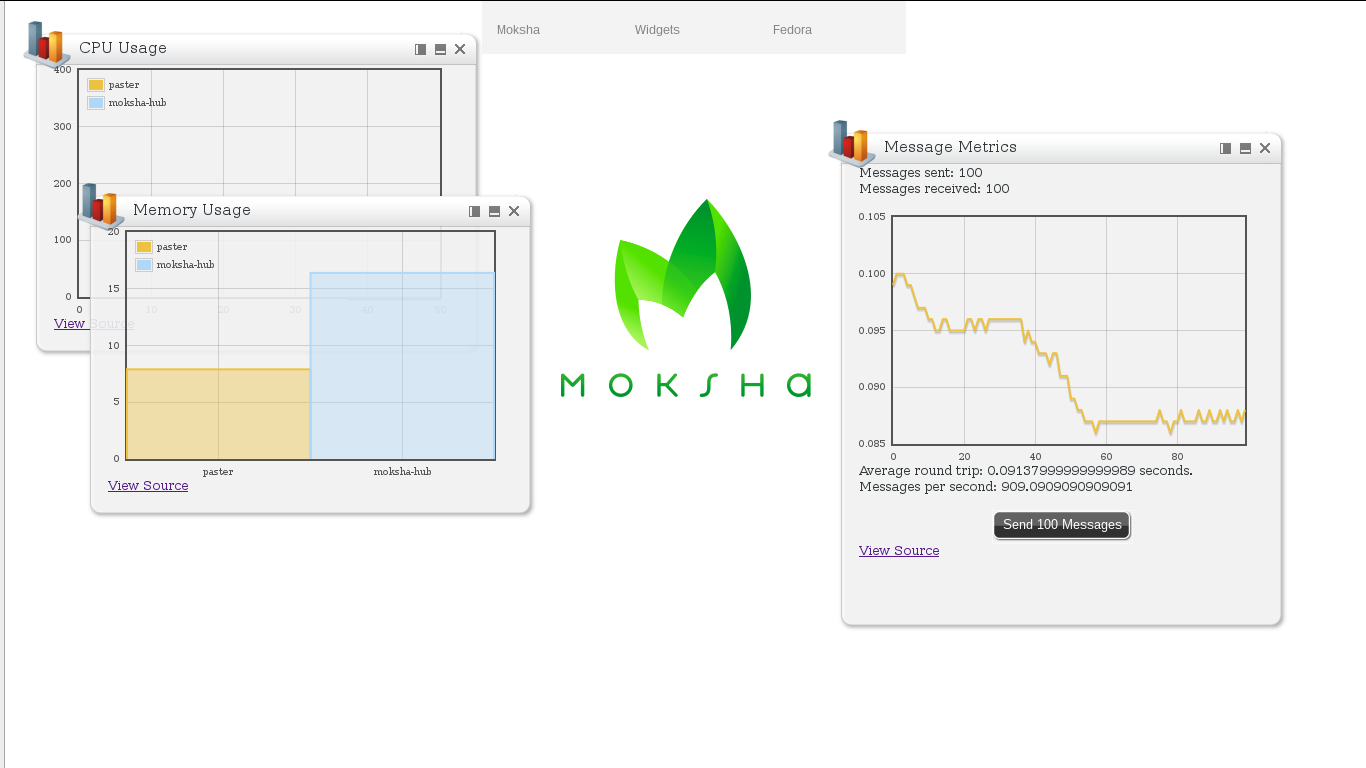

The Moksha Demo Dashboard

Oct 25, 2012 | categories: python, moksha, fedora

Just writing to show off how easy it is to stand up the moksha demo dashboard these days (it used to be kind of tricky).

First, install some system dependencies if you don't already have them:

sudo yum install zeromq zeromq-devel python-virtualenvwrapper

Open two terminals. In the first one run:

mkvirtualenv demo-dashboard
pip install mdemos.server mdemos.menus mdemos.metrics
wget https://raw.github.com/mokshaproject/mdemos.server/master/development.ini
paster serve --reload development.ini

And in the second one run:

workon demo-dashboard
moksha-hub

"Easy." Point your browser at http://localhost:8080/ for action.

p.s. -- In other news, I got fedmsg working with zeromq-3.2 in our staging infrastructure yesterday. It required this patch to python-txzmq. That one aside, python-zmq and php-zmq "just worked" in epel-test. If you're writing zeromq code, you probably want to read this porting guide.

Fuzzing zeromq

Sep 18, 2012 | categories: python, zeromq, fedora

So, my project for Fedora Infrastructure (fedmsg) connects services together with zeromq. Way back in the spring of this year, skvidal suggested that I "fuzz" it and see what happens, "fuzz" meaning try to cram random bits down its tubes.

I have a little pair of python scripts that I carry around in my ~/scratch/ dir to debug various zeromq situations. One of the scripts is topics_pub.py; it binds to an endpoint and publishes messages on topics. The other is topics_sub.py which connects to an endpoint and prints messages it receives to stdout.
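The scratch scripts themselves aren't shown in the post. A minimal sketch of what such a pair might look like with pyzmq follows; it's an assumption, not the author's code. The function names, the default topics `foo`/`bar`, the single-file `pub`/`sub` command line, and the optional receive timeout are all hypothetical.

```python
import sys
import time

import zmq  # pyzmq


def publish(endpoint, topics=("foo", "bar"), count=None, interval=1.0):
    """Bind a PUB socket and send one [topic, body] message per interval."""
    sock = zmq.Context.instance().socket(zmq.PUB)
    sock.bind(endpoint)
    try:
        i = 0
        while count is None or i < count:
            topic = topics[i % len(topics)]
            # First frame is the topic; SUB sockets filter on its prefix.
            sock.send_multipart([topic.encode(), ("message %d" % i).encode()])
            i += 1
            time.sleep(interval)
    finally:
        sock.close(linger=0)


def subscribe(endpoint, count=None, timeout_ms=None):
    """Connect a SUB socket to `endpoint` and print every message it receives."""
    sock = zmq.Context.instance().socket(zmq.SUB)
    sock.setsockopt(zmq.SUBSCRIBE, b"")  # empty prefix: all topics
    if timeout_ms is not None:
        # Raise zmq.Again after this long instead of blocking forever.
        sock.setsockopt(zmq.RCVTIMEO, timeout_ms)
    sock.connect(endpoint)
    received = []
    try:
        while count is None or len(received) < count:
            topic, body = sock.recv_multipart()
            print(topic.decode(), body.decode())
            received.append((topic, body))
    finally:
        sock.close(linger=0)
    return received


if __name__ == "__main__":
    # Hypothetical CLI: python topics_scratch.py pub|sub ENDPOINT
    mode, endpoint = sys.argv[1], sys.argv[2]
    if mode == "pub":
        publish(endpoint)
    else:
        subscribe(endpoint)
```

Note that PUB/SUB has a "slow joiner" effect: messages published before the subscriber finishes connecting are simply dropped, which is why the publisher keeps sending on an interval.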

To fuzz the subscriber, I had it connect to a garbage source built with /dev/random and netcat. In one terminal, I ran:

$ cat /dev/random | nc -l 3005

and in the other, I ran:

$ python topics_sub.py "tcp://*:3005"

... and nothing happened.
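If netcat isn't handy, roughly the same garbage source can be written with Python's standard library. This is a hypothetical stand-in for `cat /dev/random | nc -l 3005`, not something from the post: `open_listener` and `stream_garbage` are made-up names, and it draws bytes from `os.urandom` rather than `/dev/random`.

```python
import os
import socket


def open_listener(port, host=""):
    """Listen for one TCP client on `port` (all interfaces by default), like `nc -l PORT`."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, port))
    listener.listen(1)
    return listener


def stream_garbage(listener, max_bytes=None, chunk_size=4096):
    """Accept one connection and write random bytes at it until the peer hangs up
    or `max_bytes` is reached. Returns the number of bytes fully sent."""
    conn, _addr = listener.accept()
    sent = 0
    with conn:
        try:
            while max_bytes is None or sent < max_bytes:
                n = chunk_size if max_bytes is None else min(chunk_size, max_bytes - sent)
                conn.sendall(os.urandom(n))
                sent += n
        except (BrokenPipeError, ConnectionResetError):
            pass  # the client gave up; for a fuzz run that is a result, not a crash
    return sent


if __name__ == "__main__":
    with open_listener(3005) as listener:
        stream_garbage(listener)
```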

To fuzz the publisher, I hooked /dev/random up to telnet:

$ python topics_pub.py "tcp://127.0.0.1:3005"
$ cat /dev/random | telnet 127.0.0.1 3005

... and it didn't fall over. Encouraging.
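The publisher-side fuzz can likewise be approximated in Python. This is a hypothetical stand-in for `cat /dev/random | telnet 127.0.0.1 3005` (the name `send_garbage` is made up); unlike the shell pipeline, it stops after `total_bytes` and reports whether the server hung up early.

```python
import os
import socket


def send_garbage(host, port, total_bytes, chunk_size=4096):
    """Connect to host:port and push `total_bytes` of random data at it.
    Returns the number of bytes written before the peer hung up (if it did)."""
    sent = 0
    with socket.create_connection((host, port)) as sock:
        try:
            while sent < total_bytes:
                n = min(chunk_size, total_bytes - sent)
                sock.sendall(os.urandom(n))
                sent += n
        except (BrokenPipeError, ConnectionResetError):
            pass  # the server dropped us: worth knowing, but not a crash
    return sent


if __name__ == "__main__":
    print(send_garbage("127.0.0.1", 3005, 10 * 1024 * 1024), "bytes sent")
```

A return value smaller than `total_bytes` means the publisher closed the connection on us, which is itself a reasonable outcome for garbage input.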

Moksha Tutorial - Live Graph of User Activity

Sep 17, 2012 | categories: python, pyramid, fedora, moksha

I've been working recently on sprucing up the fedmsg docs and the underlying Moksha docs. It seemed a natural extension of that work to put together some Moksha tutorials like this one.

Here I'll be showing you how to add a websocket-powered d3 graph of user activity to a Pyramid app using, as I said, Moksha.

Note

You can find the source for this tutorial on github.

Bootstrapping

Note

Bootstrapping here is almost exactly the same as in the Hello World tutorial. So if you've gone through that, this should be simple.

The exception is the new addition of a tw2.d3 dependency.

Set up a virtualenv and install Moksha and Pyramid (install virtualenvwrapper if you haven't already):

$ mkvirtualenv tutorial
$ pip install pyramid
$ pip install moksha.wsgi moksha.hub
$ # tw2.d3 for our frontend component.
$ pip install tw2.d3
$ # Also, install weberror for kicks.
$ pip install weberror

Use pcreate to set up a Pyramid scaffold, install dependencies, and verify that it's working. I like the alchemy scaffold, so we'll use that one. It's kind of silly, though: we won't be using a database or sqlalchemy at all for this tutorial:

$ pcreate -t alchemy tutorial
$ cd tutorial/
$ rm production.ini  # moksha-hub gets confused when this is present.
$ python setup.py develop
$ initialize_tutorial_db development.ini
$ pserve --reload development.ini

Visit http://localhost:6543 to check it out. Success.

Enable ToscaWidgets2 and Moksha Middlewares

Note

Enabling the middleware here is identical to the Hello World tutorial.

Moksha is framework-agnostic, meaning that you can use it with TurboGears2, Pyramid, or Flask. It integrates with apps written against those frameworks by way of a layer of WSGI middleware you need to install. Moksha also depends heavily on ToscaWidgets2, which has its own middleware layer. You'll need to enable both, and in a particular order!

Go and edit development.ini. There should be a section at the top named [app:main]. Change that to [app:tutorial]. Then, just above the [server:main] section add the following three blocks:

[pipeline:main]
pipeline =
    egg:WebError#evalerror
    tw2
    moksha
    tutorial

[filter:tw2]
use = egg:tw2.core#middleware

[filter:moksha]
use = egg:moksha.wsgi#middleware

You now have three new pieces of WSGI middleware wrapped around your Pyramid app. Neat! Restart pserve and check http://localhost:6543 to make sure it's not crashing.
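The "particular order" matters because of how a [pipeline:main] section is assembled: the last entry is the app, and each filter listed before it wraps everything after it, so egg:WebError#evalerror ends up outermost and sees each request first, then tw2, then moksha. Below is a plain-Python model of that composition, not PasteDeploy's own code; `make_tagging_filter`, `build_pipeline`, and `demo` are hypothetical, and the filters only record the order in which a request passes through them.

```python
def make_tagging_filter(name, log):
    """Hypothetical filter factory: records when a request reaches this layer."""
    def factory(app):
        def middleware(environ, start_response):
            log.append(name)
            return app(environ, start_response)
        return middleware
    return factory


def build_pipeline(filter_factories, app):
    """Compose filters like a [pipeline:main] section: the first listed is outermost."""
    for factory in reversed(filter_factories):
        app = factory(app)
    return app


def demo():
    log = []

    def tutorial_app(environ, start_response):
        log.append("tutorial")
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [b"ok"]

    pipeline = build_pipeline(
        [make_tagging_filter(name, log) for name in ("evalerror", "tw2", "moksha")],
        tutorial_app,
    )
    body = b"".join(pipeline({}, lambda status, headers, exc_info=None: None))
    return log, body


if __name__ == "__main__":
    print(demo())  # (['evalerror', 'tw2', 'moksha', 'tutorial'], b'ok')
```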

Provide some configuration for Moksha

Warning

This is where things begin to diverge from the Hello World tutorial.

We're going to configure moksha to communicate with zeromq and WebSocket. As an aside, one of Moksha's goals is to provide an abstraction over different messaging transports. It can speak zeromq, AMQP, and STOMP on the backend, and WebSocket or COMET emulated-AMQP and/or STOMP on the frontend.

We need to configure a number of things:

- Your app needs to know how to talk to the moksha-hub with zeromq.

- Your clients need to know where to find their websocket server (it's housed inside the moksha-hub).

Edit development.ini and add the following lines in the [app:tutorial] section. Do it just under the sqlalchemy.url line:

##moksha.domain = live.example.com
moksha.domain = localhost

moksha.notifications = True
moksha.socket.notify = True

moksha.livesocket = True
moksha.livesocket.backend = websocket
#moksha.livesocket.reconnect_interval = 5000
moksha.livesocket.websocket.port = 9998

zmq_enabled = True
#zmq_strict = True
zmq_publish_endpoints = tcp://*:3001
zmq_subscribe_endpoints = tcp://127.0.0.1:3000,tcp://127.0.0.1:3001

Also, add a new hub-config.ini file with the following (nearly identical) content. Notice that the only real difference is the value of zmq_publish_endpoints:

[app:tutorial]
##moksha.domain = live.example.com
moksha.domain = localhost

moksha.livesocket = True
moksha.livesocket.backend = websocket
moksha.livesocket.websocket.port = 9998

zmq_enabled = True
#zmq_strict = True
zmq_publish_endpoints = tcp://*:3000
zmq_subscribe_endpoints = tcp://127.0.0.1:3000,tcp://127.0.0.1:3001
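The two files only work together because their ports line up: pserve (development.ini) publishes on 3001, the moksha-hub (hub-config.ini) publishes on 3000, and both subscribe to both, so a message from either process reaches both. The small configparser sketch below makes that wiring explicit. It assumes everything runs on one host (so matching port numbers is enough), and `endpoints`, `port_of`, and `listeners` are hypothetical helpers, not part of Moksha.

```python
import configparser


def endpoints(ini_text, section="app:tutorial"):
    """Return (publish, subscribe) endpoint lists from one ini file's text."""
    # interpolation=None: Paste-style ini files may contain %(here)s and friends.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    settings = parser[section]

    def split(value):
        return [item.strip() for item in value.split(",") if item.strip()]

    return (split(settings["zmq_publish_endpoints"]),
            split(settings["zmq_subscribe_endpoints"]))


def port_of(endpoint):
    """'tcp://127.0.0.1:3001' -> 3001 (also works for 'tcp://*:3001')."""
    return int(endpoint.rsplit(":", 1)[1])


def listeners(configs):
    """Map each process name to the processes subscribed to one of its publish ports.

    Assumes every process runs on the same host, so matching port numbers is enough.
    """
    parsed = {name: endpoints(text) for name, text in configs.items()}
    wiring = {}
    for publisher, (pubs, _subs) in parsed.items():
        pub_ports = {port_of(e) for e in pubs}
        wiring[publisher] = sorted(
            name for name, (_pubs, subs) in parsed.items()
            if pub_ports & {port_of(e) for e in subs}
        )
    return wiring
```

Fed the text of both files, for example `listeners({"pserve": open("development.ini").read(), "moksha-hub": open("hub-config.ini").read()})`, it should report that each process is heard by both.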

Emitting events when users make requests

This is the one tiny little nugget of "business logic" we're going to add. When a user anywhere makes a Request on our app, we want to emit a message that can then be viewed in graphs by other users. Pretty simple: we'll just emit a message on a topic we hardcode that has an empty dict for its body.

Add a new file, tutorial/events.py with the following content:

from pyramid.events import NewRequest
from pyramid.events import subscriber

from moksha.hub.hub import MokshaHub

hub = None


def hub_factory(config):
    """ Lazily create a single MokshaHub for this process and reuse it. """
    global hub
    if hub is None:
        hub = MokshaHub(config)
    return hub


# @subscriber registrations take effect via config.scan(), which the
# alchemy scaffold's tutorial/__init__.py already calls.
@subscriber(NewRequest)
def emit_message(event):
    """ For every request made of our app, emit a message to the moksha-hub.
    Given the config from the tutorial, this will go out on port 3001.
    """

    hub = hub_factory(event.request.registry.settings)
    hub.send_message(topic="tutorial.newrequest", message={})

Combining components to make a live widget

With those messages now being emitted on the "tutorial.newrequest" topic, we can construct a frontend widget with ToscaWidgets2 that listens to that topic (using a Moksha LiveWidget mixin). When a message is received on the client, the JavaScript contained in onmessage will be executed (and passed a JSON object of the message body). We'll ignore that since it's empty, and just increment a counter provided by tw2.d3.

Add a new file tutorial/widgets.py with the following content:

from tw2.d3 import TimeSeriesChart
from moksha.wsgi.widgets.api.live import LiveWidget


class UsersChart(TimeSeriesChart, LiveWidget):
    id = 'users-chart'
    topic = "tutorial.newrequest"
    onmessage = """
    tw2.store['${id}'].value++;
    """

    width = 800
    height = 150

    # Keep this many data points
    n = 200
    # Initialize to n zeros
    data = [0] * n


def get_time_series_widget(config):
    return UsersChart(
        backend=config.get('moksha.livesocket.backend', 'websocket')
    )
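The `n` and `data` attributes suggest a fixed-width window of counts, with `onmessage` incrementing a counter (`tw2.store['${id}'].value++`). The post doesn't show how tw2.d3 advances that window over time, so the following is only a Python model of the idea, assuming the chart periodically closes out the current count and drops the oldest point. `RollingCounter` and its methods are hypothetical, not part of tw2.d3.

```python
from collections import deque


class RollingCounter:
    """Hypothetical model of a fixed-width time series of request counts."""

    def __init__(self, n=200):
        # Like `data = [0] * n`: start with n zeros; deque(maxlen=n) drops the oldest.
        self.window = deque([0] * n, maxlen=n)
        self.current = 0

    def on_message(self, body):
        # Like `tw2.store['${id}'].value++`: the (empty) body is ignored.
        self.current += 1

    def tick(self):
        """Close out the current interval and return the window, oldest first."""
        self.window.append(self.current)
        self.current = 0
        return list(self.window)


counter = RollingCounter(n=3)
counter.on_message({})
counter.on_message({})
print(counter.tick())  # [0, 0, 2]
print(counter.tick())  # [0, 2, 0]
```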

Rendering Moksha Frontend Components

With our widget defined, we'll need to expose it to our chameleon template and render it. Instead of doing this per-view like you might normally, we're going to flex Pyramid's events system some more and inject it (and the requisite moksha_socket widget) on every page.

Go back to tutorial/events.py and add the following new handler:

from pyramid.events import BeforeRender
from pyramid.threadlocal import get_current_request

from moksha.wsgi.widgets.api import get_moksha_socket

from tutorial.widgets import get_time_series_widget


@subscriber(BeforeRender)
def inject_globals(event):
    """ Before templates are rendered, make moksha front-end resources
    available in the template context.
    """

    request = get_current_request()

    # Expose these as global attrs for our templates
    event['users_widget'] = get_time_series_widget(request.registry.settings)
    event['moksha_socket'] = get_moksha_socket(request.registry.settings)

And lastly, go edit tutorial/templates/mytemplate.pt so that it displays users_widget and moksha_socket on the page:

<div id="bottom">
  <div class="bottom">
    <div tal:content="structure users_widget.display()"></div>
    <div tal:content="structure moksha_socket.display()"></div>
  </div>
</div>

Running the Hub alongside pserve

When the moksha-hub process starts up, it will begin handling your messages. It also houses a small websocket server that the moksha_socket will try to connect back to.

Open up two terminals and activate your virtualenv in both with workon tutorial. In one of them, run:

$ moksha-hub -v hub-config.ini

And in the other run:

$ pserve --reload development.ini

Now open two browsers (say, one Chrome, the other Firefox) and visit http://localhost:6543/ in both. In one of them, reload the page over and over again; you should see the graph in the other one "spike", showing a count of all the requests issued.

atexit for threads

Aug 17, 2012 | categories: python, fedora

I ran into some wild stuff the other day while working on fedmsg for Fedora Infrastructure (FI).

tl;dr -> atexit won't fire for threads. Objects stored in an instance of threading.local do get reliably cleaned up, though. Implementing __del__ on an object there seems to be a sufficient replacement for atexit in a multi-threaded environment.

Some Context -- The goal of fedmsg is to link all the services in FI together with a message bus. We're using python (naturally) and zeromq. lmacken's Moksha is in the mix, which lets us swap out zeromq for AMQP or STOMP if we like (we need AMQP in order to get messages from bugzilla, for instance).

We actually have a first iteration running in production now. You can see reprs of the live messages if you hop into #fedora-fedmsg on freenode.

One of the secondary goals of fedmsg (and the decisive one for this blog post) is to be as simple as possible to invoke. If we make sure that our data models have __json__ methods, then invocation can look like:

import fedmsg
fedmsg.publish(topic='event', msg=my_obj)

fedmsg does lots of "neat" stuff here. It:

- Creates a zeromq context and socket as a singleton to keep around.

- Changes the topic to org.fedoraproject.blog.event.

- Converts my_obj to a dict by calling __json__ if necessary.

- Cryptographically signs it if the config file says to do so.

- Sends it out.
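The topic-rewriting and `__json__` steps above can be sketched without zeromq. This is a hypothetical illustration, not fedmsg's implementation: `prepare_message` is a made-up name, and `prefix`/`modname` are plain parameters here because the post doesn't say how fedmsg derives them. Signing and the singleton socket are left out entirely.

```python
import json


def prepare_message(topic, msg, prefix="org.fedoraproject", modname="blog"):
    """Hypothetical sketch of fedmsg.publish's bookkeeping, minus zeromq and signing."""
    # 'event' -> 'org.fedoraproject.blog.event'
    full_topic = ".".join([prefix, modname, topic])
    if hasattr(msg, "__json__"):
        msg = msg.__json__()  # let data models describe themselves as dicts
    # A real implementation would sign here (if configured) and then send on a
    # long-lived zeromq socket; this sketch just returns what would go out.
    return full_topic, json.dumps(msg)


class Post:
    """Hypothetical data model exposing __json__."""

    def __json__(self):
        return {"title": "hello"}


print(prepare_message("event", Post()))
# ('org.fedoraproject.blog.event', '{"title": "hello"}')
```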

The flipside of simplifying the API is that we offloaded a lot of complexity to the configuration in /etc/fedmsg.d/: which port? Where are the certificates? Nonetheless, I'm pretty happy with it.

The Snag -- fedmsg played nicely in multi-process environments (say, mod_wsgi). Each process would pick a different port and nobody stomped on each other's resources. It wasn't until last week that we stumbled into a use case that required a multi-threaded environment. Threads fought over their process's zeromq socket: sadness.

Solution: Hang my singleton wrapper on threading.local! I wrote some "integration-y" tests and it worked like a charm.
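A minimal sketch of a singleton hung on `threading.local`, so each thread lazily gets its own context instead of sharing one per process. `ThreadContext`, `_local`, and `get_context` are hypothetical names; in fedmsg the object would hold the zeromq context and socket.

```python
import threading


class ThreadContext:
    """Hypothetical stand-in for fedmsg's context object (zmq context + socket)."""

    def __init__(self):
        self.owner = threading.current_thread().name


_local = threading.local()


def get_context():
    """Return the calling thread's ThreadContext, creating it on first use."""
    ctx = getattr(_local, "context", None)
    if ctx is None:
        ctx = _local.context = ThreadContext()
    return ctx
```

Repeated calls in one thread return the same object, while a call from another thread builds a fresh one, so no two threads ever touch the same socket.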

The last problem was cleanup. I had hitherto been using atexit.register to clean up the zeromq context and sockets at the end of a process, but it wasn't being called when threads finished up. What to do?

First level of evil: I dove into the source of CPython (for the first time) looking for thread exit hooks. No luck.

Second level of evil: I dove into inspect. Could I rewrite the call stack and inject fedmsg cleanup code at the end of my topmost parent's frame? No luck (CPython told me "read-only").

The Solution -- Something, somewhere is either responsibly cleaning up or calling del on threading.local's members.

I simply had to add a __del__ method to my main FedmsgContext class, and everything fell into place at threads' end.
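A small experiment shows both halves of the tl;dr on CPython: an `atexit` hook registered inside a thread does not run when that thread ends, while `__del__` on an object stored in a `threading.local` does. `Resource` and `run_demo` are hypothetical names; `Resource.__del__` stands in for closing zeromq sockets. The demo waits briefly for the cleanup rather than assuming its exact timing, and other Python implementations may behave differently.

```python
import atexit
import threading


class Resource:
    """Hypothetical stand-in for a FedmsgContext holding zmq sockets."""

    def __init__(self, on_cleanup):
        self.on_cleanup = on_cleanup

    def __del__(self):
        # Where the real object would close its sockets and context.
        self.on_cleanup()


_local = threading.local()


def run_demo(timeout=2.0):
    """Return (del_ran, atexit_ran) as observed after a worker thread exits."""
    deleted = threading.Event()
    atexit_fired = threading.Event()

    def worker():
        atexit.register(atexit_fired.set)  # runs at interpreter exit, not thread exit
        _local.resource = Resource(deleted.set)

    t = threading.Thread(target=worker)
    t.start()
    t.join()
    # Wait briefly instead of assuming the exact moment thread-local
    # storage is released.
    return deleted.wait(timeout), atexit_fired.is_set()


if __name__ == "__main__":
    print(run_demo())  # on CPython this should print (True, False)
```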
